The Google Meet Media API lets you access live RTP audio and video streams as well as control streams during a Google Meet call via WebRTC. Unlike the Google Meet REST API—which gives you post-call artifacts like transcripts and recordings—the Google Meet Media API gives you real-time data from Google Meet programmatically. You will not get transcripts or chat from this API.

Is Google Meet Media for you?

Ideal if:

- All meetings are on Google Meet

- You’re building internal tooling that needs data in real time

- You're comfortable implementing your own WebRTC stack and handling things like audio/video decoding and speaker detection using CSRC identifiers

Consider other options if:

- You need the transcript streamed; audio and video are streamed, but there’s no chat or transcript stream

- Your product needs to support multiple platforms (Google Meet Media is Google Meet specific)

- You can’t control the host domain (you/your users do not have access to admin controls for the workspace that controls the meeting)

- You want post-meeting artifacts like transcripts and recordings (use the Google Meet REST API for this)

- You’re on a tight timeline (the required scope approval and security assessment process takes longer than a month)

- You need support for breakout rooms

To learn about other ways to get transcripts from Google Meet, check out our overview of all the APIs for getting transcripts from Google Meet.

What data you can get from Google Meet Media

You can access audio and video streams and meeting metadata. Video streams support screenshare capture. No other streams are currently available.

Integrating with Google Meet Media

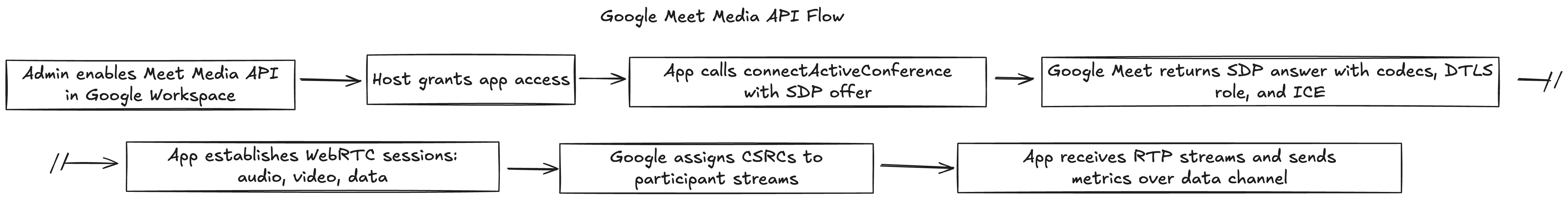

The Google Meet Media API uses standard WebRTC building blocks and data channels for metrics and control.

Google provides a considerable amount of sample code to help with this process, and you can also check out our sample app. Whether or not you use these samples, the rest of this section explains the code and requirements, making it easier to build your own app from the sample code.

During session setup, the required data channels are established and used for tasks such as managing stream lifetimes, coordinating media routing, and exchanging control messages. These channels also carry the periodic getStats() results that your WebRTC stack reports to Google, typically every 10 seconds; these reports serve as a heartbeat for stream health and metrics compliance.

Your implementation has to monitor channels in real time and, if you want video streams, you must send the video assignment once.
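A minimal sketch of the client side of this wiring, assuming the channel labels from Google's Meet Media API guide (session-control, media-stats, media-entries, participants, and video-assignment; verify them against the current docs before relying on them). openMeetDataChannels is a hypothetical helper that accepts any object with a createDataChannel(label, options) method, such as a browser RTCPeerConnection, so it can be exercised with a stub. The channels are created before the SDP offer so they are part of the negotiation, and the video-assignment channel is only opened when you want video (also an assumption).

```javascript
// Channel labels are assumed from Google's Meet Media API guide; verify before use.
const MEET_CHANNEL_LABELS = ["session-control", "media-stats", "media-entries", "participants"];
const VIDEO_ASSIGNMENT_LABEL = "video-assignment"; // only needed if you request video

// Hypothetical helper: opens the control channels on `pc` (an RTCPeerConnection or
// any object with createDataChannel) and routes each incoming message to onMessage.
// Call it before createOffer() so the channels are part of the SDP negotiation.
function openMeetDataChannels(pc, { wantVideo = false, onMessage = () => {} } = {}) {
  const labels = wantVideo ? [...MEET_CHANNEL_LABELS, VIDEO_ASSIGNMENT_LABEL] : MEET_CHANNEL_LABELS;
  const channels = new Map();
  for (const label of labels) {
    const channel = pc.createDataChannel(label, { ordered: true });
    channel.onmessage = (event) => {
      let payload;
      try {
        payload = JSON.parse(event.data); // control messages are assumed to be JSON
      } catch {
        payload = event.data; // pass anything else through unparsed
      }
      onMessage(label, payload);
    };
    channels.set(label, channel);
  }
  return channels;
}
```

Routing everything through one onMessage(label, payload) callback keeps the per-channel handling (stats configuration, media entries, participant updates) in a single place you can test without a real peer connection.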

There’s no SDK or helper library. You are expected to implement or integrate a compliant WebRTC stack yourself, including:

- Session Description Protocol (SDP) offer/answer

- Interactive Connectivity Establishment (ICE) negotiation

- Codec negotiation and Real-time Transport Protocol (RTP) handling, including support for dynamic codec switching at runtime.

- Handling control and metrics communication over data channels

- Real-time metrics system to comply with MediaStatsConfiguration (see the appendix for more details on MediaStatsConfiguration and getStats())

- Stream prioritization logic to handle stream limits (fallback to audio-only, degrade video, etc.)

- Connection monitoring (detect ICE disconnect, reconnection logic, handle mid-meeting revocations)
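Stream prioritization is your own application logic rather than part of the Meet API. A minimal sketch, assuming a hypothetical planStreams helper and a bandwidth estimate you already have (for example from getStats()): it caps video requests at the three virtual video streams Meet lets clients request, degrades quality first, then drops streams, and finally falls back to audio-only. The tier resolutions and bitrates are illustrative placeholders, not figures from Google.

```javascript
// Illustrative numbers only; tune them for your own use case.
const MAX_VIDEO_STREAMS = 3; // Meet's cap on requested virtual video streams
const AUDIO_RESERVE_KBPS = 150; // headroom kept for the audio streams
const TIERS = [
  { name: "high", width: 1280, height: 720, kbps: 1500 },
  { name: "medium", width: 640, height: 360, kbps: 600 },
  { name: "low", width: 320, height: 180, kbps: 200 },
];

// Hypothetical planner: decides how many video streams to request and at which tier.
function planStreams(availableKbps, wantedVideoStreams) {
  const wanted = Math.min(Math.max(0, Math.floor(wantedVideoStreams)), MAX_VIDEO_STREAMS);
  const budget = availableKbps - AUDIO_RESERVE_KBPS;
  if (wanted === 0 || budget <= 0) return { mode: "audio-only", videoStreams: 0, tier: null };

  // 1) Degrade quality first: pick the best tier that fits every wanted stream.
  for (const tier of TIERS) {
    if (wanted * tier.kbps <= budget) return { mode: "video", videoStreams: wanted, tier: tier.name };
  }
  // 2) Then drop streams: request as many lowest-tier streams as the budget allows.
  const lowest = TIERS[TIERS.length - 1];
  const count = Math.min(wanted, Math.floor(budget / lowest.kbps));
  if (count >= 1) return { mode: "video", videoStreams: count, tier: lowest.name };

  // 3) Finally fall back to audio only.
  return { mode: "audio-only", videoStreams: 0, tier: null };
}
```

For example, planStreams(2000, 3) keeps all three streams but drops them to the medium tier, while planStreams(300, 3) falls back to audio-only.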

You’re operating at the transport layer of Google Meet, which requires deep familiarity with WebRTC internals, SDP structure, codec negotiation, and RTP packet handling. Codec negotiation is completed through the Google Meet REST API during signaling, before the WebRTC media session starts.
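A minimal sketch of that signaling step, assuming the developer-preview endpoint spaces.connectActiveConference under https://meet.googleapis.com/v2beta, a JSON request body whose offer field carries your SDP, and a response whose answer field carries Meet's SDP; verify the path and field names against Google's API reference. buildConnectActiveConferenceRequest and connectToMeet are hypothetical helpers, and the access token must carry the restricted Meet Media scopes.

```javascript
// Assumed endpoint shape (developer preview, v2beta); verify against Google's reference.
const MEET_API_BASE = "https://meet.googleapis.com/v2beta";

// Hypothetical helper: builds fetch() arguments that exchange a local SDP offer
// for Meet's SDP answer. `spaceName` is a resource name such as "spaces/abc123".
function buildConnectActiveConferenceRequest(spaceName, sdpOffer, accessToken) {
  if (!/^spaces\/[^/]+$/.test(spaceName)) {
    throw new Error(`expected a resource name like "spaces/...", got ${spaceName}`);
  }
  if (!sdpOffer.startsWith("v=0")) {
    throw new Error("offer does not look like an SDP body");
  }
  return {
    url: `${MEET_API_BASE}/${spaceName}:connectActiveConference`,
    init: {
      method: "POST",
      headers: { Authorization: `Bearer ${accessToken}`, "Content-Type": "application/json" },
      body: JSON.stringify({ offer: sdpOffer }), // field name assumed
    },
  };
}

// Hypothetical usage (browser, or Node 18+ with a WebRTC implementation):
// create the offer, send it, then apply Meet's answer.
async function connectToMeet(pc, spaceName, accessToken) {
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  const { url, init } = buildConnectActiveConferenceRequest(spaceName, offer.sdp, accessToken);
  const response = await fetch(url, init);
  if (!response.ok) throw new Error(`connectActiveConference failed: HTTP ${response.status}`);
  const { answer } = await response.json(); // field name assumed
  await pc.setRemoteDescription({ type: "answer", sdp: answer });
}
```

Keeping the request builder separate from the network call makes the signaling payload easy to inspect and test while the scope approval is still pending.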

Google Meet Media requirements summary

- OAuth scopes: Restricted scopes (meetings.conference.media.readonly, etc.). Requires app verification and a 4–7 week third-party security assessment

- Codec support: AV1, VP8, VP9. Streams must conform to reference decoders (libaom for AV1, libvpx for VP8/VP9); runtime decoding may use faster alternatives like dav1d. See the appendix for codec and RTP requirements.

- RTP header extensions: Absolute Send Time, Absolute Capture Time, Audio Level, etc. See the appendix for RTP details.

- Virtual stream caps: 3 audio streams are created automatically, but you can disable audio stream creation in the config. No video streams are created automatically, but clients can request up to 3 video streams.

- Consent flow: The host or a co-host must approve app access in the meeting. If you are inviting others to a Google Meet via a calendar invite, third-party apps must be enabled in the calendar event to receive data using the Google Meet Media API. If the calendar invite had this setting disabled, users can still enable third-party apps in host controls once the meeting has started. If they do neither, the call to connectActiveConference will fail. If a user revokes consent, the session terminates immediately. See the appendix for consent and join behaviors.

Not requirements, but best practices:

Bandwidth: ≥4 Mbps recommended for decoding full-quality streams

libwebrtc versioning (client side): Google Meet’s media stack uses the same WebRTC codebase as Chromium. Clients should:

- Use the official libwebrtc library for SDP, ICE, Datagram Transport Layer Security-Secure Real-time Transport Protocol (DTLS-SRTP), RTP, etc.

- Ensure that their libwebrtc version is ≤ 12 months behind the latest stable Chromium release

Constraints & limitations

- Encrypted or watermarked meetings are unsupported: If client-side encryption or watermarking is enabled, the Google Meet Media API connection is automatically rejected.

- Host must be present in consumer-account-hosted meetings: While recording previously didn’t work for consumer accounts (@gmail.com), Google now states that clients can capture meeting data using the Google Meet Media API if the host is present in Gmail-hosted meetings. For meetings hosted by a consumer account, your app can't connect until the host joins; otherwise, the connection silently fails. We couldn’t validate this due to developer preview restrictions and lack of consumer account access at the time of writing.

- All participants must be in the developer preview program: Because the Google Meet Media API is in developer preview, all participants must be in the developer preview program for the app to capture any data from them (e.g. speech, video, screenshare). If an app using the Google Meet Media API is enabled before participants outside the developer preview program join a meeting, those participants can’t be heard by the participants who are in the program (which is pretty crazy). If you try to enable an app that uses the Google Meet Media API during a meeting where any participant is not part of the developer preview program, the app will fail to get data from the Meet Media API. As we found in our own testing, users who are not in the developer preview program can still join as a viewer.

- Consent is volatile: Anyone (even viewers, who cannot share data) can revoke consent at any time, and the media stream will terminate immediately with no recovery mechanism provided by the platform.

- No built-in fallback or retry: Your app must detect disconnection, perform ICE restarts, and implement custom reconnect logic if you want to handle interruptions.

- No access to post-meeting data: The Google Meet Media API does not provide recordings, transcripts, or attendance logs after the meeting. You can composite the real-time video and audio streams during the meeting to create a recording yourself; however, an easier way to get a recording is the Google Meet REST API.
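Since the platform provides no retry, the recovery policy is yours to design. A minimal sketch, assuming a browser-style RTCPeerConnection: nextIceAction maps iceConnectionState values to an action, backoffDelayMs spaces out ICE restarts exponentially, and monitorIce (a hypothetical helper) wires them together. The restart limit, the delays, and giving up on closed are our assumptions; a revoked consent cannot be recovered by retrying.

```javascript
// Maps RTCPeerConnection.iceConnectionState to a recovery action (policy is an assumption).
function nextIceAction(state) {
  switch (state) {
    case "disconnected":
      return "wait"; // often transient: ICE may recover on its own
    case "failed":
      return "restart-ice";
    case "closed":
      return "stop"; // connection is gone; a new session (and consent) is needed
    default:
      return "none"; // new, checking, connected, completed
  }
}

// Exponential backoff: 1 s, 2 s, 4 s, ... capped at maxMs.
function backoffDelayMs(attempt, baseMs = 1000, maxMs = 30000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Hypothetical wiring for a browser RTCPeerConnection. restartIce() fires
// negotiationneeded; you still have to create and signal a new offer yourself.
function monitorIce(pc, onGiveUp, maxRestarts = 3) {
  let restarts = 0;
  pc.addEventListener("iceconnectionstatechange", () => {
    const state = pc.iceConnectionState;
    const action = nextIceAction(state);
    if (action === "restart-ice" && restarts < maxRestarts) {
      setTimeout(() => pc.restartIce(), backoffDelayMs(restarts));
      restarts += 1;
    } else if (action === "restart-ice" || action === "stop") {
      onGiveUp(state); // out of restarts, or the connection was closed
    } else if (state === "connected" || state === "completed") {
      restarts = 0; // healthy again, so reset the backoff
    }
  });
}
```

Treating "disconnected" as a wait rather than an immediate restart avoids renegotiating on brief network blips, which ICE usually recovers from by itself.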

For ways to get transcripts programmatically from Google Meet, check out our blog on all of the methods to get transcripts from Google Meet.

How Google Meet Media API compares to other options

Google Meet Media API vs Google Meet REST API

Google Meet’s REST API is designed for post-meeting data. It lets you download recordings, transcripts, and meeting metadata after the meeting ends (assuming that you’re a host or approved participant, and the meeting workspace is on an approved Google plan). As with the Google Meet Media API, you need restricted scopes (as well as sensitive scopes), leading to a long approval process.

This works well for anything that requires access to data after the meeting, but not for live use cases. Both the Google Meet Media API and the Google Meet REST API are best suited to internal use cases. With the Meet REST API, a user must belong to a workspace that allows use of the Meet REST API in order to get artifact access. Transcripts and recordings become available only after a significant delay once a meeting ends, often approaching or exceeding the meeting's duration.

Google Meet Media API, by contrast, delivers real-time audio and video (including screensharing), making it suitable for building real-time apps.

Google Meet Media API vs Meeting Bots

Before the Meet Media API, the only way to access live Meet data was to build a bot that joins meetings—typically via a headless browser that acted like a participant and relayed audio/video streams to a backend. If you’re interested in that approach, we’ve open-sourced a bot that transcribes calls and documented it in a blog post.

Bots have one big advantage: they’re cross-platform and don’t depend on the host org's admin settings. But they come with real downsides when building:

- Maintenance: bots join Google Meet through headless browsers, which requires orchestrating virtual displays, audio/video routing, and backend infrastructure for processing streams.

- Bots are visible in the meeting, which is a user experience consideration.

Google Meet Media API gives you native, first-class access to the underlying real-time media streams without requiring extra participants.

The tradeoff: it’s Google Meet-only, and while in developer preview it only works if all participants are in the developer preview program.

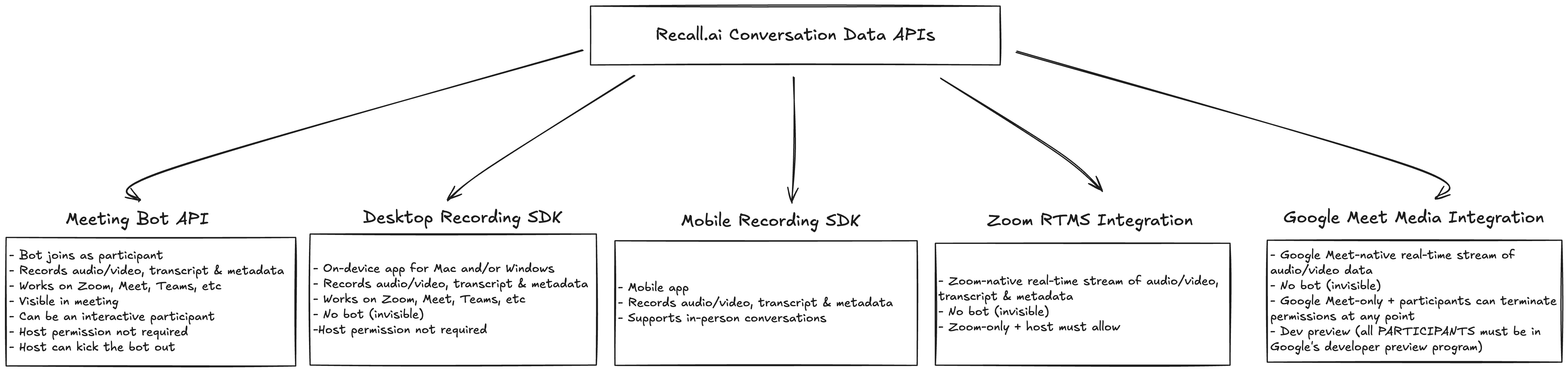

Google Meet Media API vs Recall.ai

Recall.ai provides a cross-platform API that lets you get recordings, transcripts and metadata from Google Meet, Microsoft Teams, Zoom and more through a single integration. It supports all form factors of meeting recording, including the Google Meet Media API, meeting bots, and an on-device desktop recording app.

The Google Meet Media API is Google Meet-native, but it requires several prerequisites from all meeting participants, plus non-trivial engineering work. Recall.ai offers the option to integrate the Google Meet Media API in addition to other form factors. This ensures consistent behavior across meeting platforms and lets clients fall back to other form factors when the Google Meet Media API can’t be used.

The options for gathering meeting data using Recall.ai are as follows:

Comparison Chart: Google Meet REST API vs Google Meet Media API vs Recall.ai

| Feature | REST API | Meet Media API | Recall.ai |
|---|---|---|---|
| Audio access | ✅ Available post-meeting only; audio must be demuxed from the provided video recording | ✅ Real-time only; raw RTP audio available during the meeting | ✅ Post-meeting and real-time raw audio available |
| Video access | ✅ Available post-meeting; video recording provided | ✅ Available in real time; video streamed during the meeting | ✅ Post-meeting and real-time video available |
| Transcription access | ✅ Available post-meeting; transcript provided | ❌ Not available; must pipe audio to a speech-to-text (STT) service to get a transcript | ✅ Real-time and post-meeting transcript available |
| Works with encrypted/watermarked meetings | ✅ | ❌ Blocked entirely | ✅ |
| Setup complexity | Requires waiting for scope approvals, but implementation is easy | Requires waiting for scope approvals; must implement a WebRTC stack | No waiting; single API or SDK install |
| OAuth scopes required | ✅ Mix of sensitive and restricted scopes; requires audit | ✅ Restricted scopes; requires audit | ❌ No OAuth scopes required |

When the Google Meet Media API Makes Sense (And When It Doesn’t)

Use the Google Meet Media API if your product needs live access to audio, video, and participant metadata during meetings, all participants are in the developer preview program, and you don’t mind waiting for scopes to be approved and permissions to be in place. Transcripts require sending the audio to a separate transcription service.

Use the Google Meet REST API if you don’t need real-time transcripts, recordings, or metadata, you’re optimizing for implementation simplicity, and you don’t mind waiting for scopes to be approved and permissions to be in place.

Use Recall.ai if your product needs to work across Google Meet, Zoom, Microsoft Teams, and more. Recall.ai provides a Google Meet Media integration, and also offers a Meeting Bot API or Desktop Recording SDK depending on the form factor you prefer. This is the fastest way to build cross-platform products.

To simplify using the Google Meet Media API, we’ve built a sample app and written docs that show how to use the Google Meet Media API through Recall.ai. This gives you native access to Google Meet Media while handling the heavy lifting—consent flow, metrics, reconnects, and WebRTC plumbing—behind a single API.

Happy building!

Appendix

Consent & Join Behavior

| Scenario | Behavior |
|---|---|
| Host didn't pre-authorize app | App is blocked from connecting |
| Host revoked access mid-call | Media stream is terminated immediately |
| App joins before host (in Gmail-hosted call) | Connection fails silently; no built-in retry mechanism |
| Meeting is encrypted or watermarked | Connection is rejected at signaling |

Example: If your app tries to connect before the organizer of a Gmail-hosted call has joined, your SDP offer will be ignored and you won’t get ICE candidates back. Your app must watch for this, wait for the host, and restart the flow after consent is granted.
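Because the failure is silent, the practical signal is a timeout. A minimal sketch, assuming connectOnce is your own (hypothetical) function that tears down any previous peer connection, sends a fresh SDP offer, and resolves once Meet answers and ICE connects: withTimeout turns silence into an error, and connectWhenHostJoins (also hypothetical) retries the whole flow. The default timings are placeholders, not values from Google.

```javascript
// Rejects if `promise` does not settle within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Repeats the full connect flow until it succeeds or attempts run out.
// connectOnce(attempt) must clean up the previous attempt's RTCPeerConnection itself.
async function connectWhenHostJoins(
  connectOnce,
  { timeoutMs = 15000, retryDelayMs = 10000, maxAttempts = 20 } = {},
) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      // Promise.resolve().then() also catches synchronous throws from connectOnce.
      return await withTimeout(Promise.resolve().then(() => connectOnce(attempt)), timeoutMs);
    } catch (err) {
      lastError = err; // an ignored offer shows up here as a timeout
      if (attempt < maxAttempts) await sleep(retryDelayMs);
    }
  }
  throw new Error(`gave up after ${maxAttempts} attempts: ${lastError && lastError.message}`);
}
```

Retrying the whole offer/answer exchange, rather than waiting on a single stalled attempt, matches the behavior described above: the original offer is simply ignored, so only a fresh one sent after the host joins can succeed.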

Metrics: MediaStats Requirements

Google enforces real-time metrics reporting via the media-stats data channel.

Google sends a MediaStatsConfiguration message with:

- uploadIntervalSeconds: how often to report stats (e.g. every 10 seconds)

- allowlist: map of allowlisted fields (e.g. framesPerSecond, jitter, totalAudioEnergy)

You must:

- Call getStats() on each RTCPeerConnection

- Filter stats by allowed keys

- Serialize & send them via UploadMediaStatsRequest over the data channel

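Example:

```javascript
// Browser example: collect allowlisted stats and upload them over the media-stats channel.
// `allowlist` is assumed to map a stats report type (e.g. 'inbound-rtp') to its allowed
// field names, as delivered in MediaStatsConfiguration. The message envelope below is
// illustrative; check Google's reference for the exact UploadMediaStatsRequest schema.
async function uploadMediaStats(pc, dataChannel, allowlist) {
  const stats = await pc.getStats();
  const filtered = [];
  for (const report of stats.values()) {
    const allowedFields = allowlist[report.type];
    if (!allowedFields) continue; // report type not requested by Meet
    const entry = { id: report.id, type: report.type };
    for (const field of allowedFields) {
      if (report[field] !== undefined) entry[field] = report[field];
    }
    filtered.push(entry);
  }
  dataChannel.send(JSON.stringify({ kind: 'UploadMediaStatsRequest', stats: filtered }));
}

// Upload on the interval Meet requested; returns a function that stops uploading.
function startStatsUploads(pc, dataChannel, config) {
  const id = setInterval(
    () => uploadMediaStats(pc, dataChannel, config.allowlist).catch(console.error),
    config.uploadIntervalSeconds * 1000,
  );
  return () => clearInterval(id);
}
```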

Failure to send media-stats can result in the connection dropping.

RTP & Codec Requirements

Google Meet is strict about media conformance. You'll need a compliant encoder/decoder and negotiation layer.

Video Codecs

| Codec | Notes |
|---|---|
| AV1 | Main profile. Bitstreams must conform to libaom output for compatibility. Runtime decoding may use dav1d for performance. |
| VP9 | Profile 0. Conformance based on libvpx behavior. |
| VP8 | Widely supported fallback |

Audio Codec

- Opus — required and expected

Google may require your implementation to decode pre-encoded test streams and match the output of the reference decoder. For AV1, this reference is libaom; for VP8 and VP9, it's libvpx.
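A minimal sketch of that check, assuming the comparison is bit-exact on raw I420 frames (libvpx and libaom test vectors are typically verified this way, via checksums of decoded frames) and that you produce the reference frames by running libaom or libvpx yourself; how Google packages and scores its test streams is not covered here. compareToReference and i420FrameBytes are hypothetical helpers. The size helper also shows why odd dimensions need care: chroma planes round up.

```javascript
// Size in bytes of one I420 (YUV 4:2:0) frame. Chroma planes round odd
// dimensions up, so 641×361 is not simply 1.5 × width × height.
function i420FrameBytes(width, height) {
  const chroma = Math.ceil(width / 2) * Math.ceil(height / 2);
  return width * height + 2 * chroma;
}

// Bit-exact comparison of your decoder's frames against reference-decoder frames
// (libaom for AV1, libvpx for VP8/VP9). Both are arrays of Uint8Array frames.
function compareToReference(decoded, reference) {
  if (decoded.length !== reference.length) {
    return { match: false, reason: `frame count ${decoded.length} vs ${reference.length}` };
  }
  for (let i = 0; i < reference.length; i++) {
    const ours = decoded[i];
    const ref = reference[i];
    if (ours.length !== ref.length) {
      return { match: false, frame: i, reason: `frame size ${ours.length} vs ${ref.length}` };
    }
    for (let j = 0; j < ref.length; j++) {
      if (ours[j] !== ref[j]) return { match: false, frame: i, offset: j, reason: "byte mismatch" };
    }
  }
  return { match: true };
}
```

Reporting the first mismatching frame and byte offset makes it much easier to tell a plane-layout or rounding bug from a genuine decoding difference.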

RTP Details (Operational Requirements)

- Resolution: 128×128 → 2880×1800

- Frame rates: 1–30 fps

- Bitrates: 30 kbps–5 Mbps

- Must support:

  - Scalability (SVC): spatial & temporal layers

  - Odd frame sizes (e.g. 641×361)

- Must not use:

  - RTP padding

  - Frame size mismatch

Example: Sending VP9 at 640×360 @ 30 fps is valid. A 641×361 VP8 stream is also acceptable, since odd frame sizes must be supported, but the same stream sent with RTP padding will be rejected by Google’s media stack.
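The padding rule and the operational ranges can be checked mechanically. A minimal sketch, assuming you have raw RTP packets as Uint8Array values: parseRtpHeader reads the fixed header defined in RFC 3550, including the padding (P) bit and the CSRC list used for speaker identification, and checkStreamParams compares planned stream settings against the ranges listed above. Both are hypothetical helpers, and treating 2880×1800 as a width×height maximum is our assumption.

```javascript
// Parses the 12-byte fixed RTP header plus the CSRC list (RFC 3550, section 5.1).
function parseRtpHeader(packet) {
  if (packet.length < 12) throw new Error("packet shorter than the 12-byte RTP header");
  const version = packet[0] >> 6;
  if (version !== 2) throw new Error(`unsupported RTP version ${version}`);
  const padding = (packet[0] & 0x20) !== 0; // P bit: must be clear for Meet
  const extension = (packet[0] & 0x10) !== 0; // X bit: header extensions follow the CSRCs
  const csrcCount = packet[0] & 0x0f;
  const marker = (packet[1] & 0x80) !== 0;
  const payloadType = packet[1] & 0x7f;
  const view = new DataView(packet.buffer, packet.byteOffset, packet.byteLength);
  if (packet.length < 12 + 4 * csrcCount) throw new Error("truncated CSRC list");
  const csrcs = [];
  for (let i = 0; i < csrcCount; i++) csrcs.push(view.getUint32(12 + 4 * i)); // big-endian
  return {
    version, padding, extension, marker, payloadType, csrcs,
    sequenceNumber: view.getUint16(2),
    timestamp: view.getUint32(4),
    ssrc: view.getUint32(8),
  };
}

// Operational ranges from the list above (resolution orientation is an assumption).
const LIMITS = { minW: 128, minH: 128, maxW: 2880, maxH: 1800, minFps: 1, maxFps: 30, minKbps: 30, maxKbps: 5000 };

// Returns a list of problems; an empty list means the settings are within range.
function checkStreamParams({ width, height, fps, kbps }) {
  const problems = [];
  if (width < LIMITS.minW || height < LIMITS.minH || width > LIMITS.maxW || height > LIMITS.maxH) {
    problems.push("resolution out of range");
  }
  if (fps < LIMITS.minFps || fps > LIMITS.maxFps) problems.push("frame rate out of range");
  if (kbps < LIMITS.minKbps || kbps > LIMITS.maxKbps) problems.push("bitrate out of range");
  return problems;
}
```

With these two checks, a 641×361 stream passes checkStreamParams, while any of its packets with the padding bit set would be flagged by parseRtpHeader(packet).padding.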

Required WebRTC/RTP header extensions

Meet requires your WebRTC client to negotiate several RTP header extensions (via the WebRTC API). Google’s documentation lists:

| Extension | Purpose |
|---|---|
| Absolute Send Time | Marks when each packet was sent, so the receiver can estimate network delay for bandwidth estimation. |
| Transport-Wide Congestion Control | Supports congestion control by allowing receivers to report per-packet arrival times, enabling accurate bandwidth estimation. |
| Absolute Capture Time | Synchronizes audio/video across devices and participants by marking the exact time of media capture. |
| Dependency Descriptor | Describes frame dependencies for scalable video coding (e.g., VP9-SVC), enabling efficient stream adaptation. |
| Audio Level Indication | Reports the speaker's audio level (volume) for active speaker detection. |

Tip: Ensure these extensions are correctly included in your SDP offer and that your WebRTC stack actively supports them in outgoing RTP packets.
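One way to act on this tip is to scan your SDP for the matching a=extmap lines before sending it. A minimal sketch: the URIs below are the ones libwebrtc uses for these extensions to the best of our knowledge, so confirm them against Google’s documentation. missingHeaderExtensions is a hypothetical helper, and it scans the whole SDP rather than each m= section (audio level belongs on audio, the dependency descriptor on video).

```javascript
// Extension URIs as used by libwebrtc (verify against Google's docs).
const REQUIRED_EXTENSIONS = {
  "Absolute Send Time": "http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time",
  "Transport-Wide Congestion Control": "http://www.ietf.org/id/draft-holmer-rmcat-transport-wide-cc-extensions-01",
  "Absolute Capture Time": "http://www.webrtc.org/experiments/rtp-hdrext/abs-capture-time",
  "Dependency Descriptor": "https://aomediacodec.github.io/av1-rtp-spec/#dependency-descriptor-rtp-header-extension",
  "Audio Level Indication": "urn:ietf:params:rtp-hdrext:ssrc-audio-level",
};

// Returns the names of required extensions that no a=extmap line offers.
function missingHeaderExtensions(sdp) {
  const offered = new Set();
  for (const line of sdp.split(/\r?\n/)) {
    // a=extmap:<id>[/<direction>] <uri> [<attributes>]  (RFC 8285)
    const match = /^a=extmap:\d+(?:\/\w+)?\s+(\S+)/.exec(line);
    if (match) offered.add(match[1]);
  }
  return Object.entries(REQUIRED_EXTENSIONS)
    .filter(([, uri]) => !offered.has(uri))
    .map(([name]) => name);
}
```

Running this against pc.localDescription.sdp after setLocalDescription() catches a stack that silently dropped an extension before Meet has a chance to reject or degrade the session.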

Codec Negotiation Example

Here’s an example of valid video codec lines in your SDP offer:

```
m=video 9 UDP/TLS/RTP/SAVPF 98 100 101
a=rtpmap:98 VP9/90000
a=rtpmap:100 VP8/90000
a=rtpmap:101 AV1/90000
```

To be accepted by Meet, the selected codec:

- Must match the required payload type

- Must support correct layering and resolution behavior

- Cannot include proprietary extensions

Google also provides a great example in their docs.
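To sanity-check an offer like the m=video example in this section, you can map each payload type on the m=video line to its a=rtpmap entry. A minimal sketch: videoCodecsFromSdp is a hypothetical helper that scopes the lookup to the video section only (payload type numbers can repeat across m= sections) and keeps the m-line order, which is the offerer's preference order. Real offers usually also list rtx, red, and ulpfec entries, which show up here as extra "codecs".

```javascript
// Maps each payload type on the m=video line to its a=rtpmap codec and clock rate.
function videoCodecsFromSdp(sdp) {
  const lines = sdp.split(/\r?\n/);
  const start = lines.findIndex((l) => l.startsWith("m=video "));
  if (start === -1) return [];
  // The video section runs until the next m= line (or the end of the SDP).
  let end = lines.findIndex((l, i) => i > start && l.startsWith("m="));
  if (end === -1) end = lines.length;
  const section = lines.slice(start, end);

  // m=<media> <port> <proto> <fmt> ... : payload types start at the fourth field.
  const payloadTypes = section[0].trim().split(/\s+/).slice(3).map(Number);
  const rtpmap = new Map();
  for (const line of section) {
    const m = /^a=rtpmap:(\d+)\s+([^/\s]+)\/(\d+)/.exec(line);
    if (m) rtpmap.set(Number(m[1]), { codec: m[2], clockRate: Number(m[3]) });
  }
  // Keep m-line order, since it expresses codec preference.
  return payloadTypes.map((pt) => ({ pt, ...(rtpmap.get(pt) || { codec: null, clockRate: null }) }));
}
```

For the example offer above, this returns VP9, VP8, and AV1 in that order, each with a 90000 Hz clock rate; a payload type with a null codec points to a missing a=rtpmap line.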

WebRTC Stack Versioning (Best Practice)

Although not enforced, Google recommends:

- Use the official libwebrtc implementation

- Your version of libwebrtc should be ≤12 months behind current Chromium

Why? Google Meet evolves alongside Chromium WebRTC. If your stack diverges too much (e.g., using outdated SDP or ICE implementations), negotiation will break silently or streams will degrade. Google does commit to giving 12 months’ notice before clients must support a newly released codec.

WebRTC Stack

The WebRTC stack consists of Session Description Protocol (SDP) negotiation, Interactive Connectivity Establishment (ICE) candidates, Datagram Transport Layer Security-Secure Real-time Transport Protocol (DTLS-SRTP), and Real-time Transport Protocol (RTP) media streams. We highly encourage you to read up on these steps and look at sample code before building your own WebRTC stack.

Quick Glossary

SDP (Session Description Protocol)

- A text-based format that describes what each side supports (like video codecs, audio options, and encryption). Used to set up the rules of the media connection.

ICE (Interactive Connectivity Establishment)

- A process for figuring out how two computers can connect through the internet, even across NATs or firewalls. It tries different IP addresses and routes to find one that works.

DTLS-SRTP (Datagram Transport Layer Security-Secure Real-time Transport Protocol)

- A two-part system for securing media:

  - DTLS: Sets up encryption keys

  - SRTP: Uses those keys to encrypt video/audio streams

RTP (Real-time Transport Protocol)

- The protocol that actually sends the audio and video. It's fast, works in real time, and supports things like timestamps and speaker IDs.