Building a tool that needs transcripts from Google Meet? This post breaks down every viable method to programmatically capture transcripts from Google Meet so you can pick the one that fits your infra, use case, and timeline.

You’ll find brief structured evaluations for each of the following options:

- Headless Bot (Caption Scraping): Uses a bot running in a headless browser to join the meeting, enable captions, and extract transcript text from the DOM in real time.

- Headless Bot (Audio to ASR): Uses a bot running in a headless browser that captures tab audio and streams it to an external automatic speech recognition (ASR) engine for transcription.

- Chrome Extension (Caption Scraping): Injects a content script into the Meet tab to observe and extract captions directly from the page’s DOM.

- Chrome Extension (Audio to ASR): Captures tab audio, which is streamed to an external ASR service for transcription.

- Google Meet API: Fetches post-meeting artifacts such as transcripts and recordings via the official Google Meet API.

- Desktop App: Captures system audio and sends it to a local or remote ASR engine for transcription, or scrapes captions.

For a high-level comparison of all options, including real-time support, transcription quality, diarization, cost, and engineering complexity, see the chart at the end of this guide.

If you're looking to get transcripts from Zoom as well, check out all the methods to get transcripts from Zoom.

Option 1: Bot-Based DOM Scraping of Google Meet Captions

Why you'd use this

You don't need an audio recording of the meeting and you're optimizing for speed and cost. You’re comfortable maintaining selectors as Google Meet changes its DOM and can tolerate imperfect transcripts.

How it works

A headless browser bot (via e.g. Playwright or Puppeteer) logs into a Google account, joins a Google Meet, and enables captions in the UI. It observes the caption container in the DOM to extract transcript updates as they appear. The captured text is streamed or stored as raw text transcripts.
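Caption text in the DOM is usually rewritten in place while someone is speaking, so a scraper that records every DOM mutation ends up with many overlapping partial lines. Below is a minimal sketch of the merging step. It assumes your bot already pulls (speaker, text) snapshots out of the caption container; the selectors for that depend on Meet's current DOM and are not shown. The CaptionMerger class and its 10-character prefix rule are hypothetical heuristics for illustration, not part of any library.

```python
from dataclasses import dataclass, field

# Heuristic (assumption): a snapshot continues the previous one if it starts
# with the same first few characters. Tune this against real captions.
PREFIX_CHARS = 10


@dataclass
class Segment:
    speaker: str
    text: str


@dataclass
class CaptionMerger:
    """Collapses repeated caption snapshots into one segment per utterance."""

    segments: list[Segment] = field(default_factory=list)

    def update(self, speaker: str, text: str) -> None:
        text = text.strip()
        if not text:
            return
        last = self.segments[-1] if self.segments else None
        continues = (
            last is not None
            and last.speaker == speaker
            and text.startswith(last.text[:PREFIX_CHARS])
        )
        if continues:
            # Same utterance growing or being revised: keep only the newest text.
            last.text = text
        else:
            # New speaker, or Meet cleared and restarted the caption block.
            self.segments.append(Segment(speaker, text))


merger = CaptionMerger()
for speaker, snapshot in [
    ("Speaker A", "Originally published"),
    ("Speaker A", "Originally published in 1854"),
    ("Speaker B", "Walden is"),
    ("Speaker B", "Walden is a vivid account"),
]:
    merger.update(speaker, snapshot)

for segment in merger.segments:
    print(f"{segment.speaker}: {segment.text}")
# Speaker A: Originally published in 1854
# Speaker B: Walden is a vivid account
```

A revision that changes the first few characters (for example, "Henry D Through" corrected to "Henry D. Thoreau") would be treated as a new segment here, which is one more reason caption-based transcripts stay imperfect.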

Benefits

- Minimal setup complexity: No audio capture is needed.

- Resource-efficient: CPU-only workloads; no encoding or audio processing required.

- Works in real time: Captions appear as users speak and can be streamed with sub-second latency.

Drawbacks

- Brittle to DOM changes: Google regularly ships UI updates. A minor frontend change (e.g., class name, aria role) can break your scraper.

- Captions-only accuracy: Caption text is lossy. Google paraphrases aggressively, truncates long phrases, and omits punctuation. While speaker labels are shown in the UI and now appear in the newer caption DOM structure, they aren’t embedded in a stable, machine-readable way. Parsing them still relies on fragile selectors or visual heuristics, making attribution lossy and error-prone.

- Captions must be manually enabled: The bot must click the UI toggle for captions, which may fail silently if selectors change or the page hasn't fully loaded.

- Single caption language at a time: Google Meet renders only one caption language concurrently; a bot can script menu toggles to switch languages, but there’s no automatic multi-language stream. Here’s a video to demonstrate the issue in practice.

Who should use this?

You might opt for this path when you are building fast-turnaround prototypes, lightweight summarization pipelines, or real-time UI overlays and when perfect transcription is not critical.

How to build

If you're interested in this method, I've published a tutorial blog post on how to build a Google Meet bot that scrapes captions. I've also open sourced the code for anyone who is curious.

If you're leaning toward a bot form factor but want to skip the engineering time and maintenance overhead of building one, Recall.ai provides an API that handles all of the transcript, audio, video, and metadata capture from Google Meet, Zoom, Microsoft Teams, and more.

Option 2: Headless Browser Bot → Audio → Transcription Provider

Why you'd use this

You need accurate transcription, speaker diarization, or multilingual support, none of which captions reliably provide. To support these requirements you capture full audio and pipe it to an Automatic Speech Recognition (ASR) engine. You prefer a bot form factor.

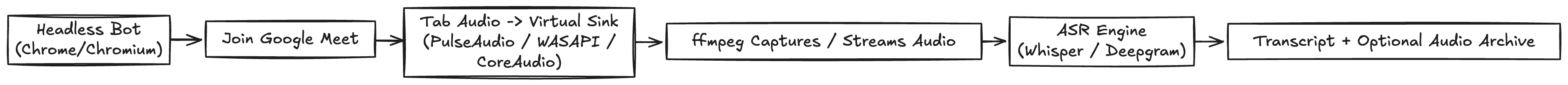

How it works

A headless browser bot joins the Google Meet using Chrome or Chromium. The browser’s tab audio is routed to a virtual sink (e.g., PulseAudio or snd-aloop). ffmpeg taps that sink for audio capture and records or streams the audio. The captured stream is fed into an ASR engine (e.g., Whisper, Deepgram) for transcription.
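A minimal sketch of the audio plumbing on Linux, assuming PulseAudio: create one null sink per bot, point the browser at it with the PULSE_SINK environment variable, and have ffmpeg read the sink's ".monitor" source. The sink name meet_bot_1 and the 16 kHz mono 16-bit PCM output are assumptions; check which encoding and sample rate your ASR provider expects. The functions only build the commands, they don't run anything.

```python
import shlex


def null_sink_command(sink: str) -> list[str]:
    """pactl command that creates a PulseAudio null sink for one bot."""
    return ["pactl", "load-module", "module-null-sink", f"sink_name={sink}"]


def browser_env(sink: str, base_env: dict[str, str]) -> dict[str, str]:
    """Environment for the browser process: PULSE_SINK selects its default output sink."""
    return {**base_env, "PULSE_SINK": sink}


def ffmpeg_capture_command(sink: str, sample_rate: int = 16000) -> list[str]:
    """ffmpeg command that reads the sink's monitor source and writes raw PCM to stdout."""
    return [
        "ffmpeg", "-loglevel", "error",
        "-f", "pulse", "-i", f"{sink}.monitor",  # every null sink exposes a .monitor source
        "-ac", "1", "-ar", str(sample_rate),     # mono, resampled for the ASR
        "-f", "s16le", "-acodec", "pcm_s16le",   # raw 16-bit little-endian PCM
        "pipe:1",                                # write to stdout
    ]


sink = "meet_bot_1"  # hypothetical per-bot sink name
print(shlex.join(null_sink_command(sink)))
print(shlex.join(ffmpeg_capture_command(sink)))
```

To use it, you would start ffmpeg with subprocess.Popen(cmd, stdout=subprocess.PIPE) and forward fixed-size reads from proc.stdout to your ASR client. Giving every bot its own sink name (and, in containers, its own PulseAudio server) is what keeps sessions from bleeding into each other.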

Benefits

- Accurate transcription: ASR engines can produce more accurate transcripts than meeting captions because they take inflection, speaker overlap, and other acoustic cues into account. This allows them to punctuate more accurately, differentiate between speakers, account for accents, etc.

- Captions not required: Works even if captions are off or unavailable. It’s robust to UI or language changes.

Drawbacks

- Loopback sink is still required: You have to link the browser to a virtual/loopback sink to capture the browser’s audio output. A loopback device is a software device that exposes the system’s audio output as an input, provided through the platform's audio system (e.g., PulseAudio on Linux or CoreAudio on macOS). This adds setup complexity and introduces platform-specific dependencies that can be hard to debug.

- Debugging is non-obvious: Failures are silent. There's no audio indicator in logs or browser UI. Often, you'll only notice missing audio after analyzing file size, inspecting waveform outputs, or reviewing ASR transcripts that stop abruptly or drop segments.

- Container orchestration is required: Each headless browser bot joins a different meeting and outputs its own audio. To prevent audio from different sessions leaking into each other (cross-session contamination), you have to run each bot in an isolated environment with its own virtual audio sink and sound server. Deploying in Docker or Kubernetes requires using unique sockets, per-container virtual sinks, and strict namespace boundaries to prevent collisions or bleed-over between containers.
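Because capture failures are silent, it can help to measure the level of the captured audio yourself instead of waiting for the ASR output to look wrong. A minimal sketch, assuming the capture produces 16-bit little-endian mono PCM. The SilenceWatchdog class, the -50 dBFS threshold, and the 30-second window are hypothetical, illustrative values.

```python
import array
import math
import sys


def rms_dbfs(pcm: bytes) -> float:
    """RMS level of 16-bit little-endian PCM in dBFS (0 dBFS = full scale)."""
    samples = array.array("h")
    samples.frombytes(pcm[: len(pcm) - len(pcm) % 2])  # ignore a trailing odd byte
    if sys.byteorder == "big":
        samples.byteswap()  # input is little-endian; convert to host order
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 20 * math.log10(math.sqrt(mean_square) / 32768)


class SilenceWatchdog:
    """Flags a capture that has stayed below a level threshold for too long."""

    def __init__(self, threshold_dbfs: float = -50.0, max_silent_seconds: float = 30.0):
        self.threshold_dbfs = threshold_dbfs
        self.max_silent_seconds = max_silent_seconds
        self.silent_for = 0.0

    def feed(self, pcm: bytes, seconds: float) -> bool:
        """Feed one chunk of `seconds` duration; returns True once silence lasts too long."""
        if rms_dbfs(pcm) < self.threshold_dbfs:
            self.silent_for += seconds
        else:
            self.silent_for = 0.0
        return self.silent_for >= self.max_silent_seconds
```

Call feed() on each chunk read from the capture and alert (or restart the bot) when it returns True. A meeting where nobody talks will also trigger it, so treat it as a signal to investigate rather than proof of failure.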

Who should use this?

Infra-focused teams who want a bot form factor and are prioritizing accuracy. Useful when transcription quality matters, such as for legal review, training data, or fine-tuned LLM pipelines. If you need multilingual transcripts, this is a solid option.

Option 3: Google Meet Caption Scraper Extension

Why you'd use this

You want a quick, browser-native way to extract captions from Google Meet without a bot. Ideal if you're building a tool that reacts to captions in real time and does not require perfect transcription, diarization, or multiple languages in the meeting tab itself.

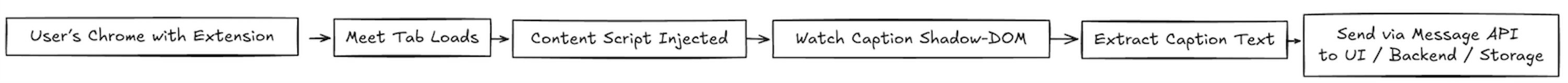

How it works

A Chrome extension runs in the context of the Google Meet tab, injecting a script that observes the DOM for caption changes. It reads the caption element and extracts captions as they appear. The extension can then expose the data to the frontend, store it locally, or send it via message passing or an external API/webhook.
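If you take the webhook route, the backend only has to accept small JSON caption events. A minimal sketch using Python's standard library, assuming the extension POSTs bodies like {"speaker": ..., "text": ..., "ts": ...}. That payload shape, the /captions path, and port 8787 are hypothetical choices for illustration; neither Google Meet nor Chrome defines them.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical payload schema the extension would POST for each caption update.
REQUIRED_FIELDS = {"speaker": str, "text": str, "ts": (int, float)}
captions: list[dict] = []  # in-memory store for the sketch; use a real database in practice


def parse_caption_event(body: bytes) -> dict:
    """Validate one caption event and keep only the expected fields."""
    event = json.loads(body)  # raises a ValueError subclass on malformed JSON
    if not isinstance(event, dict):
        raise ValueError("event must be a JSON object")
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(event.get(name), expected_type):
            raise ValueError(f"missing or invalid field: {name}")
    return {name: event[name] for name in REQUIRED_FIELDS}


class CaptionHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/captions":  # hypothetical endpoint path
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            captions.append(parse_caption_event(self.rfile.read(length)))
        except ValueError as exc:
            self.send_error(400, str(exc))
            return
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8787) -> None:
    """Blocks forever; call it from your own entry point."""
    ThreadingHTTPServer(("127.0.0.1", port), CaptionHandler).serve_forever()
```

Keeping validation in a plain function makes it easy to test without a running server and keeps malformed events from a misbehaving extension out of your store.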

Benefits

- No headless infra required: Everything runs in the browser. You don’t need a server, container, or bot.

- Simple to deploy for internal users: Users just install an extension. There’s no account management and no Google Meet login automation.

- Useful for real-time UI overlays: Because the extension runs inside the Google Meet tab, it can observe caption updates with low latency and inject custom UI directly into the page. This is useful for building overlays, live translation displays, accessibility tools, or other real-time augmentations to the Google Meet interface.

Drawbacks

- Needs user-granted capture permission: An extension can auto-open and join a Google Meet if cookies are present, but it still needs explicit permission to read DOM content. This limits automation and may require user approval or enterprise exceptions.

- Limited automation/control: You can't programmatically control caption settings, meeting join, or page reloads. Extensions operate inside a single active tab.

- Permissions and review friction: Publishing to the Chrome Web Store or running extensions at scale (e.g., in enterprise environments) can raise compliance or security concerns. Permissions to read content from Google Meet must be declared and can raise flags.

- Captions-only accuracy: Subject to the same lossy issues as DOM scraping bots.

- Hard to diarize: Speaker labels are not embedded in caption text. In some layouts, speaker hints may appear in nearby DOM nodes, but inferring attribution is brittle and unreliable.

- Single caption language at a time: Meet renders only one caption language concurrently; switching languages must be done manually or scripted, and there's no official support for simultaneous multi-language caption streams.

- Does not work for all browsers: Even if you write your logic with WebExtension APIs, you’ll still need separate packaging if you want to make your extension compatible with Safari or Firefox.

- Limited to web-based usage: While extensions can work well for Google Meet, they’re far less practical for services like Zoom or Microsoft Teams, where most users rely on native desktop or mobile apps. Extensions can’t interact with captions outside the browser, making this approach ineffective for non-browser-based meeting platforms.

Who should use this?

This option is best for teams that want to avoid managing native apps or backend infrastructure. It works when users are already in Google Meet and can install and run a browser extension. This solution is appropriate for front-end overlays, real-time analysis, or light automation where infra constraints prohibit bot-based capture.

Option 4: Google Meet Audio Recorder Extension

Why you'd use this

You have access to an ASR and you need one or more of the following: higher-quality transcription than captions can provide, speaker diarization, and/or support for multilingual meetings. The user will be present in the meeting. You want to capture audio without standing up headless infrastructure, and you're comfortable relying on browser-native APIs to access the audio stream.

How it works

A Chrome extension uses Chrome's tab capture API (chrome.tabCapture) to access audio from the current tab running Google Meet. The audio stream can be saved locally, sent to a backend, or fed into a live transcription pipeline. The extension must be triggered by a user gesture to comply with browser security policies.
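If the extension forwards raw PCM to a backend, the backend usually has to re-slice whatever chunk sizes arrive into fixed-duration frames before streaming them to an ASR. A minimal sketch of that arithmetic and re-chunking, assuming 16-bit PCM; the 16 kHz / 100 ms example values are illustrative, and the extension-side conversion from the captured MediaStream to PCM (for example, with an AudioWorklet) is not shown.

```python
from collections.abc import Iterable, Iterator


def frame_bytes(sample_rate: int, channels: int, frame_ms: int, bytes_per_sample: int = 2) -> int:
    """Bytes in one frame of interleaved PCM (16 kHz, mono, 16-bit, 100 ms -> 3200)."""
    return sample_rate * channels * bytes_per_sample * frame_ms // 1000


def rechunk(chunks: Iterable[bytes], size: int) -> Iterator[bytes]:
    """Re-slice arbitrarily sized chunks into fixed-size frames; flush any short tail at the end."""
    if size <= 0:
        raise ValueError("size must be positive")
    buffer = bytearray()
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= size:
            yield bytes(buffer[:size])
            del buffer[:size]
    if buffer:
        yield bytes(buffer)
```

For example, 16,000 samples/s × 1 channel × 2 bytes × 0.1 s = 3,200 bytes per 100 ms frame. Check your ASR provider's documentation for the frame size and encoding it expects.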

Benefits

- Browser-native and self-contained: This method doesn’t require headless browser automation, containerization, or audio plumbing. It works entirely within Chrome.

- Lower technical barrier: It’s easier for front-end engineers to implement and deploy compared to bot-based or headless approaches.

Drawbacks

- Requires user presence and action: The extension cannot run without user interaction. The user must start capture by executing a gesture (e.g., clicking a button).

- Tab visibility required: The Google Meet tab must remain open and active to capture audio. Navigating away from the tab can pause or kill the stream.

- No automation of meeting join or interaction: The extension doesn’t control the meeting or UI. It only works within the context of a tab the user is actively on.

- Browser limitations / dual-mono stream: The browser’s tab-audio capture API returns stereo output, but Google Meet fills both channels with the same mixed audio (remote + local). Because you can’t capture separate speaker channels, an ASR engine must handle speaker separation and diarization.

- Limited to web-based usage: As with Option 3, this approach only works when the meeting runs in the browser. It works well for Google Meet, but isn’t practical for services like Zoom or Microsoft Teams, where most users rely on native desktop or mobile apps. Browser extensions can’t interact with audio outside the browser, which limits coverage across platforms.
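Because both channels carry the same mixed signal, you can halve the bandwidth sent to an ASR by keeping a single channel. A minimal sketch, assuming interleaved 16-bit little-endian stereo PCM. It also reports whether the two channels are actually identical, which is a cheap way to confirm the dual-mono behavior on your own captures; if they differ, it averages them instead of dropping one.

```python
import array
import sys


def split_stereo(pcm: bytes) -> tuple[array.array, array.array]:
    """Split interleaved 16-bit little-endian stereo PCM into left and right samples."""
    samples = array.array("h")
    samples.frombytes(pcm[: len(pcm) - len(pcm) % 4])  # whole stereo frames only
    if sys.byteorder == "big":
        samples.byteswap()  # input is little-endian; convert to host order
    return samples[0::2], samples[1::2]


def to_mono(pcm: bytes) -> tuple[bytes, bool]:
    """Return (mono little-endian PCM, channels_identical)."""
    left, right = split_stereo(pcm)
    identical = left == right
    if identical:
        mono = left  # dual-mono: either channel carries the full mix
    else:
        # Channels differ: average them rather than discard one.
        mono = array.array("h", ((l + r) // 2 for l, r in zip(left, right)))
    if sys.byteorder == "big":
        mono.byteswap()  # back to little-endian for output
    return mono.tobytes(), identical
```

Note that downmixing does not help with speaker separation; the mono stream is still one mixed signal, so diarization remains the ASR's job.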

Who should use this?

You might choose this approach if your product is a browser-first tool, a user is present throughout the meeting, and that user can grant capture permission. It works for teams that either feed the audio into a diarization-capable engine, are comfortable keeping up with DOM changes for speaker name recognition, or simply do not need speaker separation.

Option 5: Google Meet API

Why you'd use this

You don’t need real-time data and want a supported, managed way to access post-meeting transcripts, recordings, or attendee lists. You prefer a clean backend integration without scraping or headless bots.

How it works

Your app uses the Google Meet REST API, part of Google Workspace, to access artifacts such as transcripts, recordings, and related metadata. After a call ends, you can fetch the available files. You can also subscribe to events like participant joins or media activity.
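A minimal sketch of the two-step retrieval, assuming you already hold an OAuth access token with the scopes listed under "Restricted artifact access" below. It builds a request for the Meet REST API's conferenceRecords.transcripts.list method, picks out transcripts whose Google Doc has been generated, and builds a Drive files.export request to download that Doc as plain text. The field names (state, docsDestination.document) and the FILE_GENERATED value reflect my reading of the v2 transcript resource; verify them against Google's current reference. No network calls are made.

```python
import urllib.parse
import urllib.request

MEET_API = "https://meet.googleapis.com/v2"
DRIVE_API = "https://www.googleapis.com/drive/v3"


def list_transcripts_request(conference_record: str, token: str) -> urllib.request.Request:
    """conference_record is a resource name such as "conferenceRecords/abc" (placeholder)."""
    url = f"{MEET_API}/{conference_record}/transcripts"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def ready_document_ids(list_response: dict) -> list[str]:
    """Google Doc IDs of transcripts whose file has been generated."""
    return [
        t["docsDestination"]["document"]
        for t in list_response.get("transcripts", [])
        if t.get("state") == "FILE_GENERATED" and "docsDestination" in t
    ]


def export_doc_request(document_id: str, token: str) -> urllib.request.Request:
    """Drive files.export request that converts the transcript Doc to plain text."""
    query = urllib.parse.urlencode({"mimeType": "text/plain"})
    url = f"{DRIVE_API}/files/{urllib.parse.quote(document_id)}/export?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
```

In practice you would send each request with urllib.request.urlopen (or a Google client library) and parse the Meet response with json.load. This sketch ignores pagination via nextPageToken.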

Benefits

- Fully supported by Google: Google provides stable API schemas and auth (OAuth + Workspace scopes).

- No scraping or bot infra required: It is a clean, sanctioned integration.

- Robust data access: Google provides post-meeting files such as transcripts and recordings, known as artifacts. Note, however, that not every meeting produces every artifact (e.g., recording and transcription must be enabled manually).

Drawbacks

- Post-meeting only: Transcripts are only available after the meeting ends. Real-time streaming isn’t available.

- Transcript delays can match or exceed meeting length: Google delays transcript availability by a significant margin after a meeting ends, often approaching or exceeding the meeting's duration. In our tests, this delay ranged from 7 minutes for a 1-minute meeting to 41 minutes for a 45-minute meeting. You can watch us test this transcript latency in a video.

- Restricted artifact access based on user and domain: Only meeting-space owners or invited participants who also have the necessary OAuth scopes and are part of the same Google Workspace as the host can retrieve artifacts. The necessary OAuth scopes are:

  - To obtain the metadata, you need either https://www.googleapis.com/auth/meetings.space.created (Sensitive Scope) or https://www.googleapis.com/auth/meetings.space.readonly (Sensitive Scope).

  - To obtain the transcript, you also need https://www.googleapis.com/auth/drive.meet.readonly (Restricted Scope).

- Slow time to market because of security assessment: Gaining access to Sensitive Scopes takes around a week because Google reviews sensitive data access through an OAuth consent screen verification process. Gaining access to Restricted Scopes takes 4 to 6 weeks because of a more laborious security assessment. Because both must be completed, the total time ranges from 4 to 7 weeks, depending on how your organization schedules the two reviews. Note also that the security assessment for Restricted Scopes must be completed by an “independent security assessor”, to whom you must pay a fee.

- API does not extend to other platforms: If you are building a cross-platform product (requiring integration with Zoom, Teams, etc.), this API will not work for any other platform you want to integrate with.

- Transcription or recording must be enabled: To generate transcripts or recordings, an admin must allow users in the Google Workspace to record/transcribe. Additionally, the meeting host or an invited participant must manually start transcription or recording during the call. If either step is missing, the corresponding artifacts will not be created. There is no automatic way to start recording/transcribing.

- Not supported in breakout rooms: Transcription is currently not available in breakout rooms, meaning conversations held there will not be captured in the transcript artifact.

- Transcript fails if storage is full: If the host’s Google Drive is full, or if the Workspace domain has exceeded its storage limit, artifacts (transcripts, recordings, etc.) will not be stored anywhere.

- Requires a qualifying paid plan: Transcription and recording are only available on select Google Workspace tiers (e.g., Business Standard, Enterprise, Education Plus), so your users must be on a paid plan to use this feature.

- Timestamps are limited in granularity: The transcript includes timestamps only every 5 minutes. This means that if a user tries to navigate to a specific moment in the audio or video by clicking on the transcript, they can only jump to the nearest 5-minute mark—not the exact point. As a result, it's difficult to locate precise segments of the recording. Additionally, speaker talk times and pacing cannot be accurately determined, since each 5-minute chunk may contain multiple speakers, and there's no breakdown of who spoke when within those intervals.
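Given how long and unpredictable the transcript delay is, a backend typically polls instead of fetching once. A minimal sketch of capped exponential backoff; fetch_state is a hypothetical callable you would implement by calling the transcripts list endpoint, the intervals (start at 60 s, double up to 10 minutes, give up after 3 hours) are illustrative, and the FILE_GENERATED state name should be checked against the current Meet API reference.

```python
import time
from typing import Callable


def backoff_delays(initial: float, factor: float, cap: float, total: float) -> list[float]:
    """Sleep intervals for capped exponential backoff, stopping before `total` seconds is exceeded."""
    delays, elapsed, delay = [], 0.0, initial
    while elapsed + delay <= total:
        delays.append(delay)
        elapsed += delay
        delay = min(delay * factor, cap)
    return delays


def wait_for_transcript(
    fetch_state: Callable[[], str],
    sleep: Callable[[float], None] = time.sleep,
    initial: float = 60.0,
    factor: float = 2.0,
    cap: float = 600.0,
    total: float = 3 * 3600.0,
) -> bool:
    """Poll until fetch_state() returns "FILE_GENERATED"; returns False on timeout."""
    if fetch_state() == "FILE_GENERATED":
        return True
    for delay in backoff_delays(initial, factor, cap, total):
        sleep(delay)
        if fetch_state() == "FILE_GENERATED":
            return True
    return False
```

Injecting sleep makes the loop testable without waiting; in production you might instead schedule the next check on a job queue so a worker is not blocked for hours.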

Who should use this?

You might choose this approach if your product only targets Google Meet, your users belong to the same Google Workspace domain as the meeting host, and you're building features that rely on official post-meeting artifacts rather than real-time data. This makes it ideal for purely internal async use cases. It might work for you if you’re comfortable navigating a multi-week OAuth approval process, can ensure both user and org-level Google Drive storage will always be available, and can rely on someone within the host’s domain manually starting transcription or recording. It also assumes you don’t need to jump to precise points in a recording or measure speaker contributions accurately.

It should be noted that there is a new Meet API in developer preview, but that API does not yet support a transcription endpoint.

Option 6: Audio-Only Desktop App

Why you'd use this

You need high-quality audio for transcription but want to avoid browser automation or headless infra, and you prefer a less intrusive form factor. You're comfortable building a consent flow to notify participants that the conversation is being recorded or transcribed, since they won’t be informed automatically and certain jurisdictions require all-party consent (often called "two-party consent," though it applies to everyone involved). You’re building for users who can run an app locally, and you have access to an ASR for transcription.

How it works

A desktop app runs on the user’s machine and captures system or browser audio, either through a loopback device (like BlackHole or VB-Cable), which acts as a virtual cable that routes audio output back into the system as input, or through ScreenCaptureKit on macOS. It detects the Google Meet tab or window, starts recording the captured audio, and optionally streams or stores it. The audio is processed by an ASR engine (Whisper, Deepgram, AssemblyAI, etc.) to generate a diarized transcript.
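One low-effort way to prototype the loopback path is to let ffmpeg record the loopback device. A minimal sketch, assuming BlackHole on macOS (read through ffmpeg's avfoundation input) and VB-Cable on Windows (read through dshow). The default device names below are assumptions that vary by driver version, and ScreenCaptureKit is not covered here. On Linux, the PulseAudio monitor-source approach from Option 2 applies instead.

```python
import sys
from typing import Optional

# Hypothetical default device names; confirm yours by listing devices with
# `ffmpeg -f avfoundation -list_devices true -i ""` (macOS) or
# `ffmpeg -list_devices true -f dshow -i dummy` (Windows).
DEFAULT_DEVICES = {
    "darwin": "BlackHole 2ch",
    "win32": "CABLE Output (VB-Audio Virtual Cable)",
}


def loopback_capture_command(
    platform: str = sys.platform,
    device: Optional[str] = None,
    out: str = "meeting.wav",
) -> list[str]:
    """Build an ffmpeg command that records a loopback device to a 16 kHz mono WAV file."""
    device = device or DEFAULT_DEVICES.get(platform)
    if device is None:
        raise ValueError(f"no loopback device configured for {platform!r}")
    if platform == "darwin":
        source = ["-f", "avfoundation", "-i", f":{device}"]  # ":<name>" = audio-only input
    elif platform == "win32":
        source = ["-f", "dshow", "-i", f"audio={device}"]
    else:
        raise ValueError(f"unsupported platform: {platform!r}")
    return ["ffmpeg", "-y", *source, "-ac", "1", "-ar", "16000", out]
```

A loopback driver only receives audio once the system output (or a multi-output device that includes it) is routed to it, which is part of the setup friction described below.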

Benefits

- Captions not required: The app runs independently of Google Meet's caption system, making it compatible across languages and browser settings.

- Platform agnostic output: The output pipeline works for any browser-based meeting platform, not just Google Meet.

Drawbacks

- Participant notification is on the app user: Since the audio capture happens locally and isn’t visible to other participants, it is up to the individual recording to notify participants that the meeting is being recorded. Legal consent requirements vary by jurisdiction.

- User installation required: Each participant who wants to record needs to install and run the desktop app for the recording to work.

- Driver setup can be blocked or complex: Most desktop apps rely on drivers that create loopback devices (like BlackHole or VB-Cable) to capture system audio. On macOS, ScreenCaptureKit is an alternative (audio capture through ScreenCaptureKit requires macOS 13 or later). On other operating systems, these drivers can be blocked by enterprise policies, require elevated permissions to install, or need platform-specific packaging and maintenance.

- Limited observability: Because the app captures system audio without tight integration into the meeting/browser, users won’t know if the audio capture failed until they review it after the call.

- No built-in speaker separation: A loopback device captures a single mixed audio stream, so the ASR you choose must support diarization to separate speakers. You can technically scrape active-speaker indicators from the Google Meet DOM, but this is brittle because selectors change.
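If you do scrape active-speaker indicators, one way to combine them with ASR diarization is to give each anonymous ASR speaker label the participant name whose active-speaker intervals overlap it the most. A minimal sketch, assuming both inputs are lists of (label, start_seconds, end_seconds) on a shared clock; aligning the two clocks is the hard part and is not shown, and labels like "spk_0" are hypothetical.

```python
from collections import defaultdict

Interval = tuple[str, float, float]  # (label, start_seconds, end_seconds)


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the intersection of two time intervals (0 if they don't touch)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def map_speakers(asr_segments: list[Interval], active_speaker: list[Interval]) -> dict[str, str]:
    """Map each ASR speaker label to the participant name it overlaps with most."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for label, s_start, s_end in asr_segments:
        for name, a_start, a_end in active_speaker:
            totals[label][name] += overlap(s_start, s_end, a_start, a_end)
    return {
        label: max(by_name, key=by_name.get)
        for label, by_name in totals.items()
        if max(by_name.values(), default=0.0) > 0  # skip labels with no overlap at all
    }
```

Voting over total overlap, rather than trusting each individual segment, tolerates the small timing offsets that DOM indicators usually have.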

Who should use this?

This method is ideal for teams building tools that focus solely on extracting insights from meetings and that do not need recorded media as an output. This form factor is especially useful in environments where meeting bots are considered intrusive.

If you're building a native app but want to avoid the pain of loopback setup, Recall.ai also offers a Desktop Recording SDK that captures transcripts with speaker names, audio and video directly from the user's device—no virtual audio drivers or bots needed.

Transcription outputs: Three approaches side-by-side

To test the transcription quality of these approaches, I gathered transcripts using a caption scraping bot (Option 1), a bot that sends audio to a transcription provider (Option 2), and Google Meet native transcription (Option 5). I’ve shared the results below (abbreviated) and ran a comparison script on the full outputs to compare each one against the original text that I read aloud.

I have not changed anything in the transcripts except trimming their length to make them easier to read. All spelling, punctuation, timestamps, and diarization are exactly as provided by the three transcription options.

| Captions Transcript (Bot) | ASR Transcript (Bot) | Google Meet API Transcript (No timestamps) |
|---|---|---|
| Maggie Veltri (0m 15s): A Princeton Classic Edition Walden 150th Anniversary edition. Henry D Through. Maggie Veltri (0m 22s): … Maggie Veltri (0m 27s): Originally published in 1854 Walden or Life in the Woods is a vivid. Account of the time that Henry Dethrow lived alone in a secluded cabin at Walden Pond… Maggie Veltri (0m 58s): Other famous sections, involve the rose visits with a Canadian woodcutter and with an Irish family, a trip to Concord and a description of his bean field. Maggie Veltri (1m 9s): … Maggie Veltri (1m 16s): … this is the ideal presentation of Thoreau's, great document of social criticism, and dissent. Maggie Veltri (1m 26s): John Updike is a Pulitzer Prize-winning. Novelist short story writer and poet internationally known for his novels Rabbit. Run Rabbit, Redux Rabbit Is Rich and Rabbit at Rest. He lives in rural Massachusetts. | Maggie Veltri (3m 0s): A Princeton Classic edition Walden 150th anniversary edition. Maggie Veltri (3m 5s): Henry D. Maggie Veltri (3m 6s): Throughout, … originally published in 1854. Maggie Veltri (3m 14s): Walden, or life in the woods, is a vivid account of the time that Henry. Maggie Veltri (3m 20s): D. Maggie Veltri (3m 20s): Thoreau, lived alone in a secluded cabin at Walden Pond. Maggie Veltri (3m 47s): Other famous sections involve thorough's visits with the canadian woodcutter and with an irish family, a trip to Concord, and a description of his bean field. Maggie Veltri (3m 58s): … Maggie Veltri (4m 8s): … this is the ideal presentation of Thoreau's great document of social criticism and dissent. Maggie Veltri (4m 15s): John Updyke is a Pulitzer Prize winning novelist, short story writer and poet internationally known for his novels Rabbit run Rabbit Redux. Maggie Veltri (4m 23s): Rabbit is rich and rabbit at rest. Maggie Veltri (4m 26s): He lives in rural. Maggie Veltri (4m 28s): Massachusetts. | Maggie Veltri: A Princeton classic Walden 150th anniversary edition. Henry Dththero … Originally published in 1854. Maggie Veltri: Walden or life in the woods is a vivid account of the time that Henry Dorougho lived alone in a secluded cabin at Walden Pond.Other famous sections involve the visits with a Canadian wood cutter and with an Irish family, a trip to conquered and a description of his bean field… Maggie Veltri: This is the ideal presentation of Theorough's great document of social criticism and disscent. John Updike is a Pulitzer Prizewinning novelist, short story writer, and poet internationally known for his novels Rabbit Run, Rabbit Redux, Rabbit is Rich, and Rabbit at Rest. He lives in rural Massachusetts. |

These three transcripts come from reading aloud the description of the 150th anniversary edition of Thoreau’s Walden. The original text is:

A Princeton Classic Edition Walden 150th Anniversary Edition Henry D. Thoreau Edited by Lyndon Shanley with an introduction by John Updike

Originally published in 1854, Walden, or Life in the Woods, is a vivid account of the time that Henry D. Thoreau lived alone in a secluded cabin at Walden Pond. It is one of the most influential and compelling books in American literature.

This new paperback edition-introduced by noted American writer John Updike celebrates the 150th anniversary of this classic work. Much of Walden's material is derived from Thoreau's journals and contains such engaging pieces as "Reading" and "The Pond in the Winter." Other famous sections involve Thoreau's visits with a Canadian woodcutter and with an Irish family, a trip to Concord, and a description of his bean field. This is the complete and authoritative text of Walden—as close to Thoreau's original intention as all available evidence allows.

For the student and for the general reader, this is the ideal presentation of Thoreau's great document of social criticism and dissent.

John Updike is a Pulitzer Prize-winning novelist, short story writer, and poet, internationally known for his novels Rabbit, Run, Rabbit Redux, Rabbit is Rich, and Rabbit at Rest. He lives in rural Massachusetts.

We found that the Google Meet native transcript was closest to the original at 85.71% accuracy, the ASR transcript was second at 83.09%, and the meeting captions were 82.04% accurate.

However, since none of these transcription methods are fully deterministic, these numbers should not be interpreted as definitive accuracy scores for each option. The results might also vary depending on the specific diction, language of the transcribed meeting, and accent of the speaker.
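The comparison script's exact scoring method isn't described here. One common approach, shown as an assumption rather than the script used for the numbers above, is word accuracy = 1 − WER, where the word error rate (WER) is the word-level edit distance (substitutions + deletions + insertions) divided by the number of words in the reference. This sketch lowercases and strips punctuation first; normalization choices like these affect the resulting score.

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase and split into words, dropping punctuation (a simplifying assumption)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_errors(reference: list[str], hypothesis: list[str]) -> int:
    """Levenshtein distance over words: substitutions + deletions + insertions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        current = [i]
        for j, hyp_word in enumerate(hypothesis, start=1):
            current.append(min(
                previous[j] + 1,                           # deletion
                current[j - 1] + 1,                        # insertion
                previous[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        previous = current
    return previous[-1]


def word_accuracy(reference: str, hypothesis: str) -> float:
    """1 - WER, clamped at 0 because insertions can push WER above 1."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    if not ref:
        raise ValueError("reference is empty")
    return max(0.0, 1 - word_errors(ref, hyp) / len(ref))
```

For example, scoring "Henry Dethrow lived alone" against "Henry D. Thoreau lived alone" counts one substitution and one deletion out of five reference words, giving an accuracy of 0.6.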

Comparison Chart: Options at a Glance

If you're comparing options quickly, here’s how they line up across real-time transcription, transcription quality, cost, diarization, audio capture, multilingual support, and infrastructure complexity.

| Option | Transcription Quality | Real-time Transcription | Diarization | Supports Multilingual | Can Capture Audio | Typical Cost** | Engineering Complexity |
|---|---|---|---|---|---|---|---|
| DOM Scraping Bot | Limited to quality of meeting captions | ✅ | ✅ Meeting captions have 100% accurate diarization | ❌ | ❌ | There is a variable cost per bot to run on a server | Medium |
| Audio Bot + ASR | Variable: Depends on the ASR you use | ✅ | ✅ ASR machine diarization | ✅ | ✅ | Relatively high cost due to cost for ASR and infra to run each bot | High |
| Chrome Extension: DOM | Limited to quality of meeting captions | ✅ | ✅ Meeting captions have 100% accurate diarization | ❌ | ❌ | Very low | Medium |
| Chrome Extension: Audio | Variable: Depends on the ASR you use | ✅ | ✅ ASR machine diarization | ✅ | ✅ | Low to Medium (Cost for the ASR varies depending on ASR chosen) | Medium |
| Google Meet API | Comparable to meeting captions; however, timestamps are only every 5 minutes. | ❌ | ✅ Comparable to ASR machine diarization | ❌ | ✅ Possible if you also enable recording during the meeting | Low (Requires eligible Google Workspace plan; storage demands may be significant for transcripts and recordings) | Low |
| Desktop App | Dependent on choice of ASR or meeting captions (user has ultimate flexibility) | ✅, but run post-meeting for simplicity | ✅ ASR machine diarization | ✅ If using ASR | ✅ | Low to Medium (local ASR) | High |
| Recall.ai | Dependent on ASR or meeting captions quality (user has ultimate flexibility) | ✅ | ✅ Supports 100% accurate diarization with speaker names | ✅ | ✅ | Low to Medium (Usage-based charge depending on volume commitment) | Low |

** Typical Cost reflects ongoing variable charges (infrastructure + API minutes), not engineering time.

Some Parting Words

Every approach in this guide comes with tradeoffs. Some options to get transcripts from Google Meet meetings prioritize transcription quality, others simplicity or real-time access. The best choice depends on what you're building, how much control you want, and how much complexity you're willing to take on.

If you're just exploring, I hope this guide gave you a clearer map of the territory. If you’re looking to capture meeting data (audio, video, transcripts, and metadata), Recall.ai offers a unified API for meeting bots that works across Google Meet, Zoom, Teams, and more, without the hassle of maintaining brittle bots or scraping infrastructure. We also provide a Desktop Recording SDK that lets you capture meeting data in a desktop app form factor.

You can get started now with our API docs, or create an account to try us out for free. Or, if you're exploring more complex workflows and would like more hands-on support, book a demo to see any of our products in action. We'll always shoot you straight, even if the best path means building your own.

Some common questions

Can I get real-time transcripts from Google Meet meetings?

Yes. With options 1-4 and option 6 outlined above you can get real-time transcripts. You can also get real-time transcripts with Recall.ai's Meeting Bot API and Desktop Recording SDK.

Is there a Google Meet transcript API that will give me transcripts in real-time?

There is no generally available (GA) native Google API for getting transcripts in real time. The Google Meet Media API is not GA and had no estimated release date at the time of publishing. The Recall.ai Meeting Bot API and Desktop Recording SDK will give you transcripts in real time, and, as mentioned, you can use Recall.ai's Google Meet API for transcription.

What is the difference between a Google Meet transcript API and Meet transcript API?

There’s no difference. As mentioned above, Google doesn’t provide a direct API for retrieving transcripts from Meet. Instead, transcripts are accessible through Drive API endpoints, which require special permissions and involve a lengthy approval process, including an audit with both time and financial costs.

How do I build a Google Meet bot from scratch to get transcripts from Google Meet?

You can follow our tutorial on how to build a Google Meet bot from scratch to get an open source Google Meet bot.

Is there an API to get a transcript from Google Meet?

You can use the Google Drive API if the meeting took place on a supported Google Workspace tier and a participant turned on transcription for the meeting. If you want the transcript in real time, the Recall.ai Meeting Bot API and Desktop Recording SDK provide it. As mentioned, you can use Recall.ai's Google Meet API for transcription.