Zoom’s Real‑Time Media Streams (RTMS) is a WebSocket‑based streaming API that provides live access to meeting media such as audio, video, transcripts, and participant events as they occur. Unlike Zoom’s traditional REST APIs, which are asynchronous and deliver data after the meeting, RTMS lets you hook into Zoom’s media pipeline to receive live data, down to individual video frames, transcription, and speaker-change notifications. RTMS is still a relatively new product, so supported features and aspects of the protocol may change.

This guide is written for engineers, PMs, and technical leaders evaluating RTMS. It covers what RTMS is, how it works, where it shines, and where its constraints show up in real end user experiences.

If you’re interested in learning more about what the experience will look like for your end users, check out our blog on the end user experience with RTMS.

RTMS Cheat Sheet

- Availability: Zoom RTMS is in General Availability (GA). You can contact Zoom to inquire about RTMS access for your account.

- Pricing: RTMS is a paid feature available via the Zoom Developer Pack. No public pricing is provided; you must talk to the Zoom sales team.

- No Bots Required: RTMS provides in-meeting access without bot participants.

- Platform Support: RTMS is only available for Zoom. To integrate with other meeting platforms such as Google Meet or Microsoft Teams, use Recall.ai, which also provides RTMS as an integration option for Zoom.

Data you can access through RTMS

- Audio & Video: Raw audio packets + real-time video as JPG, PNG, or H.264

- Transcripts: Live transcripts

- Metadata: Join/leave notices, speaker changes, screenshare events

Use cases that RTMS enables

- Live transcription or live translations

- AI copilots that work mid-conversation

- Real-time dashboards tracking attendance dynamics

These examples were previously only achievable by building a bot to join the meeting or building a desktop app to record system-level audio. RTMS provides them natively within Zoom.

If you want other ways to get transcripts from Zoom besides RTMS, check out all the APIs to get transcripts from Zoom. If you’re interested in recordings, we’ve written a similar guide on how to get recordings from Zoom. You can combine RTMS with other capture approaches to deliver a consistent experience across video conferencing platforms. If you're also interested in capturing meetings outside of Zoom, we’ll cover how Recall.ai supports RTMS alongside bots and other form factors later in this guide.

What data you can get from RTMS

You can access transcripts, audio, video, and meeting metadata. For the full list of available data, see Zoom's published data type definitions page and the types defined in the RTMS repo. For details on media formats and latency, see the appendix.

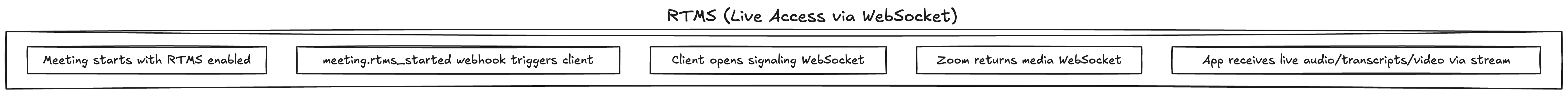

How RTMS integrates with a meeting

To get started with RTMS, your organization must first have access to RTMS and have it enabled at the account level. Once that's in place, you'll need to register a General App in the Zoom Marketplace, ensuring that RTMS-related scopes (like meeting:rtms:read) and meeting-related scopes are included under both Scopes and Access. You can find setup instructions in the Zoom RTMS tutorial section.

After your app is registered and configured in the Zoom UI, you’re ready to implement the real-time connection logic that powers RTMS. This unfolds in two key phases: signaling and media streaming.

- Signaling WebSocket: After enabling RTMS at the account level and subscribing to the meeting.rtms_started webhook, your backend establishes a WebSocket “signaling” connection to Zoom. This connection handles authentication and negotiates which types of streams, such as audio, video, captions, or participant events, your client will receive.

- Media WebSocket: Once signaling succeeds, Zoom returns a second WebSocket endpoint streaming any or all of the following:

  - Raw audio frames and speaker-separated audio channels

  - Live raw video

  - Transcripts and live captions

  - Join/leave events and speaker-change notifications

Your client must handle heartbeats, timeouts, and reconnections. Any data that isn’t captured during disconnects is gone forever.
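The keep-alive and timeout part of that work can be sketched in Python. This is a minimal sketch, not Zoom's SDK. It assumes Zoom sends a keep-alive request with `msg_type` 12 and expects a reply with `msg_type` 13 that echoes the request's `timestamp`. That is how we read Zoom's RTMS sample code, so verify the values against the current protocol docs. `keep_alive_reply` and `LivenessTimer` are hypothetical helper names, and the timeout value is yours to choose.

```python
import json
import time
from typing import Callable, Optional

# Assumed msg_type values for RTMS keep-alive messages, based on our reading of
# Zoom's RTMS sample code; confirm against the current protocol documentation.
KEEP_ALIVE_REQ = 12
KEEP_ALIVE_RESP = 13


def keep_alive_reply(raw_message: str) -> Optional[str]:
    """Return the JSON reply to send for a keep-alive request, or None otherwise."""
    msg = json.loads(raw_message)
    if msg.get("msg_type") != KEEP_ALIVE_REQ:
        return None
    # Echo the server's timestamp back (assumed response shape).
    return json.dumps({"msg_type": KEEP_ALIVE_RESP, "timestamp": msg.get("timestamp")})


class LivenessTimer:
    """Flags a socket as dead when no frame has arrived within timeout_s seconds."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock
        self._last_seen = clock()

    def touch(self) -> None:
        """Record that a frame (media or control) just arrived."""
        self._last_seen = self._clock()

    def expired(self) -> bool:
        """True once the socket has been silent longer than timeout_s."""
        return self._clock() - self._last_seen > self.timeout_s
```

In a real client, you would do the following:

- Call `keep_alive_reply` on every text frame and send any non-`None` result back on the same socket.
- Call `touch()` on every inbound frame.
- Treat `expired()` as a dropped connection that needs a reconnect.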

If you’re looking for a deeper dive into how authentication works, check out the appendix.

What to take note of when building with RTMS

Who actually controls whether RTMS starts?

| Decision authority | What they must do for RTMS to start |
|---|---|
| Zoom admin of host org | Enable RTMS and allow apps to access meeting content. If this is disabled, RTMS will never work for meetings hosted by the organization. |
| Meeting host | Approve real-time sharing and use a supported Zoom client. The host can also block RTMS entirely, pause it, or interrupt it mid-meeting. |
| Your app | Request only necessary media, use correct scopes, and handle interruptions gracefully. App design can reduce failure cases by falling back to other methods of data capture, but cannot override host or admin decisions. |
| Participants | If using an RTMS-reliant app, run Zoom client 6.5.5 or later. Participants cannot enable RTMS, disable it, or opt out individually. The only way a non-host participant can affect whether RTMS works is the Zoom client version they run, if they try to start an app that relies on RTMS. |

- Admin and Host Permissions: RTMS must be enabled by a Zoom admin at the account, group, or user level in the Zoom Web Portal. Otherwise it won’t function, even if your app is set up with the correct scopes and access. RTMS is enabled by default, but if the host’s org has disabled sharing meeting data, an app that depends on RTMS will simply not receive any data.

This means if an end user joins an external meeting in which they are not the host and the host doesn’t enable RTMS, anything reliant exclusively on RTMS will fail.

- Account Setup & App Registration: The app must be registered as a General App in the Zoom Marketplace. Server-to-Server OAuth apps and Webhook-only apps won’t work, because RTMS WebSocket access requires a General App configuration. The RTMS scopes and access must be selected in the Zoom App Marketplace UI.

- Client Compatibility: End users must run an up-to-date Zoom client (6.5.5 or later) in two cases: to approve RTMS access when they are the host, and to launch an app that relies on RTMS.

- Host Controls: Hosts can enable “Require host approval for sharing real-time meeting content.” If this setting is on:

  - Non-host RTMS requests will either be paused or blocked until approved.

  - If the host never joins, participants can’t enable RTMS. They may see a request message, but access will never be granted.

- All-or-Nothing Access: RTMS sharing is binary. Clients can choose which media to request (e.g., audio or transcripts), but hosts cannot grant access selectively (e.g., audio only, without video or transcripts). There is no partial-content control.

- Host Transitions: If the original host leaves and the new host has sharing disabled on their account, or manually disables sharing, your RTMS session is interrupted. The WebSocket is closed. You then need a new meeting.rtms_started event and a fresh connection once a valid host with permissions joins. On free plans, sharing settings keep RTMS alive automatically, so there is no disruption if the new host is on a free plan.

- Pricing & Quotas: RTMS is currently part of the “Zoom Developer Pack.” For specific pricing and quota information, reach out to a Zoom sales rep or your other Zoom contacts.

- Auto-Start & Webhook Setup: RTMS startup behavior depends heavily on admin configuration and webhook setup. To start RTMS automatically, admin or group settings must enable auto-start under settings -> allow apps to access meeting content -> edit -> toggle auto-start on. Alternatively, you can start RTMS via the REST API or the JS SDK, and end users can start it manually using the in-app button. In every case, you must subscribe to the meeting.rtms_started webhook to be notified when a meeting is RTMS-ready. That webhook triggers your signaling WebSocket handshake.

Note: If the host has not yet joined, or the designated alternate host isn’t present, any attempt by another participant to start the stream via API or SDK will fail. RTMS requires an active, eligible host in the meeting to grant access. A host can, however, allow a meeting to start without joining. In that case, the host’s app will still capture data if RTMS is set to auto-start.

- WebSocket Auth & Session Lifecycle: RTMS WebSockets use a one-time HMAC-SHA256 signature for authentication, not OAuth. If your connection drops and Zoom no longer recognizes the session, you cannot resume it or rotate credentials. You must reinitiate the handshake instead.

- Bidirectional not supported: RTMS is a receive-only API. The only messages your app sends are control packets (handshake and keep-alive). RTMS gives you live access to in-meeting audio, video, transcripts, and events, but you cannot send media back into the meeting through it. Your app cannot post in the chat, speak, or otherwise interact with participants in real time. Currently, a meeting bot is the only option for sending data back into a meeting.
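The webhook side of the auto-start and webhook setup described in the list above can be sketched as a small, framework-free Python handler. `handle_zoom_webhook`, `verify_zoom_signature`, and `RtmsStart` are hypothetical names. The sketch assumes two Zoom webhook conventions: the `endpoint.url_validation` challenge, and an `x-zm-signature` header computed over `v0:{timestamp}:{body}`. It also assumes the `meeting.rtms_started` payload carries `meeting_uuid`, `rtms_stream_id`, and `server_urls`, as in Zoom's RTMS samples. Check the field names against the current webhook reference.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class RtmsStart:
    """Values needed to open the signaling WebSocket (assumed payload field names)."""
    meeting_uuid: str
    rtms_stream_id: str
    server_urls: str


def verify_zoom_signature(body: bytes, timestamp: str, signature: str, secret_token: str) -> bool:
    """Check x-zm-signature against HMAC-SHA256 of v0:{x-zm-request-timestamp}:{raw body}."""
    message = b"v0:" + timestamp.encode() + b":" + body
    expected = "v0=" + hmac.new(secret_token.encode(), message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)


def handle_zoom_webhook(body: bytes, secret_token: str) -> Tuple[dict, Optional[RtmsStart]]:
    """Return (JSON response body, RtmsStart or None) for an already-verified request."""
    event = json.loads(body)
    kind = event.get("event")
    payload = event.get("payload", {})

    if kind == "endpoint.url_validation":
        # URL-validation challenge: echo plainToken plus its HMAC-SHA256 hex digest.
        plain = payload["plainToken"]
        encrypted = hmac.new(secret_token.encode(), plain.encode(), hashlib.sha256).hexdigest()
        return {"plainToken": plain, "encryptedToken": encrypted}, None

    if kind == "meeting.rtms_started":
        # The meeting is RTMS-ready: pass these values to the signaling handshake.
        start = RtmsStart(
            meeting_uuid=payload["meeting_uuid"],
            rtms_stream_id=payload["rtms_stream_id"],
            server_urls=payload["server_urls"],
        )
        return {}, start

    # Other events are acknowledged and ignored in this sketch.
    return {}, None
```

Your HTTP layer would handle each request in three steps:

1. Verify the signature.
2. Return the first element of the result as the JSON response body.
3. When an `RtmsStart` comes back, open the signaling WebSocket to `server_urls`.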


RTMS in Breakout Rooms

Zoom has no officially documented RTMS support for breakout rooms. If your use case involves capturing media from breakout sessions, reach out to Zoom and test carefully before deploying. Our testing found that RTMS does not currently work in breakout rooms, though this may change over time. If you need to capture media from breakout rooms, a meeting bot is currently the only option.

Zoom has stated that RTMS supports thousands of concurrent streams, but for an official limit, check with Zoom.

How RTMS compares to other options

RTMS vs Zoom REST API

Zoom’s REST API is designed for meeting management and post-meeting data. It includes endpoints for scheduling, managing participants, and downloading artifacts like recordings and transcripts after the meeting ends. Webhooks notify you of lifecycle events like start, end, or transcript completion.

This works well for dashboards, reports, or post-hoc analysis, but not for live use cases. Transcript and recording availability is delayed by a significant margin after a meeting ends, often approaching or exceeding the meeting's duration.

RTMS, by contrast, delivers real-time audio, video, and metadata, making it suitable for real-time suggestions and monitoring. However, it can’t schedule meetings or manage end users; you’ll still need the REST API for that.

RTMS vs Zoom Bots

Before RTMS, accessing live Zoom data required bots that joined meetings as an additional participant. These bots streamed audio/video to backend servers for transcription or analysis.

Bots support cross-platform use and don’t depend on host org settings, but:

- Building a bot in-house is complex to maintain. Bots join via the Zoom web client in headless browsers, which requires orchestrating virtual displays, audio/video routing, and backend infrastructure for processing streams.

- Bots are visible in the meeting, which is an end user experience consideration.

RTMS removes the need for bots and offers real-time data access via a Zoom-native API.

The tradeoff: it’s Zoom-only, and only works if the meeting host's org has enabled it and the host allows it.

RTMS with Recall.ai

Recall.ai provides a cross-platform API that unifies access to Zoom, Google Meet, Microsoft Teams, Webex, Slack and GoTo Meeting through a single integration. It lets you access recordings, transcripts, and metadata across all platforms, through the form factor of your choosing - whether that’s RTMS, meeting bots, an on-device desktop app, or mobile recording app.

RTMS is Zoom-native, but it requires specific setup and cannot be guaranteed to work in meetings where you are not the host. Recall.ai lets you integrate RTMS alongside other form factors, which keeps behavior consistent across platforms and meeting environments.

Here is how the main options for gathering Zoom meeting data compare, including those available through Recall.ai:

Form Factors for Recording Zoom Comparison Chart

| Feature | Zoom REST API | RTMS (Available through Zoom or Recall.ai) | Meeting bots (Available through Recall.ai) |
|---|---|---|---|
| Schedule Meetings | ✅ | ❌ | ❌ |
| Attendance Logs | ✅ Attendance logs available post-meeting | ✅ Participant join and leave events | ✅ Available via live events or post-meeting |
| Audio Capture | ✅ Recording available post-meeting | ✅ Delivered via raw live audio packets | ✅ Real-time audio and async recording available |
| Live Transcripts | ❌ | ✅ | ✅ Real-time and post-meeting transcripts available |
| Speaker Diarization | ✅ Speaker diarization available post-meeting | ✅ Live speaker-change events available | ✅ Speaker diarization available in real time over webhook, or via API post-meeting |
| Transcript latency | High (minutes or more, available only after the meeting ends) | Low (milliseconds to seconds) | Low (milliseconds to seconds) |
| Platform Support | Zoom only | Zoom only | Zoom, Google Meet, Microsoft Teams, Webex, Slack Huddles, GoTo Meeting, in-person meetings, and more. Check out the full list of supported platforms. |

Note: This chart compares the most commonly used form factors for recording Zoom meetings at the time of publication. For information on other form factors to record meetings, such as desktop recording, feel free to reach out to the Recall.ai team.

When to use what

- Use the Zoom REST API if three conditions hold: all the meetings you want data from are hosted on Zoom, you or someone in your organization hosts them, and you don’t need data in real time. REST is ideal for dashboards, attendance logs, or delayed analytics where real-time delivery isn't required.

- Use RTMS if all of the meetings you want data from are hosted on Zoom and you need live access to transcripts, audio, video, or participant events during meetings. RTMS is best suited for live transcription or translation, mid-conversation AI copilots, or dashboards that update in real time. Access to data depends on meetings being hosted by organizations with RTMS enabled, or by free accounts where RTMS is correctly configured. Because RTMS doesn’t work across account boundaries unless the host permits access, it's not reliable on its own for open or external-facing use cases.

- Use meeting bots when your product needs to support multiple platforms (e.g., Google Meet and Microsoft Teams) and needs consistent behavior regardless of admin settings or client versions. Meeting bots are also the only option when you want to send data back into the meeting.

- Use Recall.ai when you want access to data no matter what video conferencing platform the meeting takes place on. Recall.ai allows you to take advantage of RTMS when it is available. Recall.ai is the best choice when you want RTMS, coverage on other video conferencing platforms, and support for recording in-person meetings.

If you’re still mulling over whether RTMS makes sense for you or have decided that one of the other options is a better fit, we’re happy to help.

Book a chat with a teammate, or sign up for an account, and see you soon!

Appendix

Authentication

RTMS WebSocket connections are authenticated using a custom signature, not OAuth tokens. When initiating the signaling handshake, your client must include a signed payload using HMAC-SHA256:

HMACSHA256(client_id + "," + meeting_uuid + "," + rtms_stream_id, client_secret)

This signature, sent in the handshake message, authorizes the session and is valid only for that specific stream instance.
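Here is a minimal Python sketch of that signature, using only the standard library. The comma-joined message and the HMAC-SHA256 keying follow the formula above. Two details are assumptions: encoding the digest as lowercase hex (based on Zoom's sample code) and the helper name `rtms_signature`, which is hypothetical.

```python
import hashlib
import hmac


def rtms_signature(client_id: str, meeting_uuid: str, rtms_stream_id: str, client_secret: str) -> str:
    """HMAC-SHA256 over "client_id,meeting_uuid,rtms_stream_id", keyed by client_secret.

    Hex encoding of the digest is an assumption based on Zoom's sample code.
    """
    message = f"{client_id},{meeting_uuid},{rtms_stream_id}"
    return hmac.new(client_secret.encode("utf-8"), message.encode("utf-8"), hashlib.sha256).hexdigest()
```

The signature depends on the stream ID, so a new `meeting.rtms_started` event with a new `rtms_stream_id` always requires computing a fresh value.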

- This is static authentication, so you don’t need to refresh tokens during the session.

- Keep your client secret secure, because it generates the signature that grants access to raw media data.

Also note that if the host leaves and a new host lacks RTMS permissions, Zoom will close the WebSocket. Your app must wait for a new meeting.rtms_started webhook and then reconnect using a new signature to resume the stream.

Media formats & latency expectations

RTMS delivers the audio, video, transcript data and more over WebSockets as lightweight, structured packets. Each stream is customizable via handshake parameters, and the latency and fidelity of the media vary based on format and configuration.

Below is an example of a media handshake request for audio. For other media types, see the Zoom documentation on connecting media streams.

```
{
  // Uppercase values such as DATA_HAND_SHAKE_REQ and AUDIO are RTMS enum
  // constants; see Zoom's documentation for their numeric values
  "msg_type": DATA_HAND_SHAKE_REQ,
  "protocol_version": 1,
  "sequence": 0,
  "meeting_uuid": "YOUR_MEETING_UUID",
  "rtms_stream_id": "YOUR_RTMS_STREAM_ID",
  "signature": "YOUR_AUTH_SIGNATURE_CREATED_WITH_APP_CREDS_AND_MTG_INFO",
  "media_type": AUDIO,
  // If you do not specify any of the media_params, default values will be set
  "media_params": {
    "audio": {
      "content_type": RAW_AUDIO,
      "sample_rate": SR_16K,
      "channel": MONO,
      "codec": G722,
      "data_opt": AUDIO_MIXED_STREAM,
      "send_rate": 20
    }
  }
}
```

Audio is sent as uncompressed PCM (L16) by default, with a sample rate of 16kHz (though 8kHz, 32kHz, and 48kHz are also supported) and mono or stereo channel options. The default delivery interval is 20 milliseconds, but this can be configured up to 1000 milliseconds in 20 millisecond intervals depending on use case.
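The audio parameters above determine how much data each packet carries. Assume each packet holds exactly one `send_rate` interval of 16-bit (2-byte) L16 samples. A 16 kHz mono stream at the default 20 ms interval then yields 16,000 × 0.02 = 320 samples, or 640 bytes per packet. The hypothetical helper below does that arithmetic. It rejects intervals outside the documented 20 to 1000 ms range and intervals that are not a multiple of 20 ms.

```python
SUPPORTED_SAMPLE_RATES_HZ = (8_000, 16_000, 32_000, 48_000)
BYTES_PER_SAMPLE = 2  # L16 = 16-bit linear PCM


def validate_send_rate(send_rate_ms: int) -> None:
    """Reject delivery intervals outside 20-1000 ms or not on a 20 ms step."""
    if not 20 <= send_rate_ms <= 1000 or send_rate_ms % 20 != 0:
        raise ValueError(f"send_rate must be a multiple of 20 between 20 and 1000 ms, got {send_rate_ms}")


def pcm_chunk_bytes(sample_rate_hz: int, channels: int, send_rate_ms: int) -> int:
    """Bytes of L16 audio per packet, assuming one send interval per packet."""
    if sample_rate_hz not in SUPPORTED_SAMPLE_RATES_HZ:
        raise ValueError(f"unsupported sample rate: {sample_rate_hz}")
    if channels not in (1, 2):  # mono or stereo
        raise ValueError(f"channels must be 1 or 2, got {channels}")
    validate_send_rate(send_rate_ms)
    samples_per_channel = sample_rate_hz * send_rate_ms // 1000
    return samples_per_channel * channels * BYTES_PER_SAMPLE
```

For example, `pcm_chunk_bytes(16_000, 1, 20)` returns 640 and `pcm_chunk_bytes(48_000, 2, 20)` returns 3840. Sizes like these are useful when pre-allocating buffers for the receive path.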

Video is sent as JPG or PNG at lower frame rates (≤5 FPS) and as H.264 at higher frame rates (>5 FPS), up to a maximum of 30 FPS. Resolution options include SD, HD, FHD, and QHD. Delivery latency depends on network conditions, resolution, and per-frame compression.
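If you want to catch invalid video configurations before sending the media handshake, you can encode the frame-rate rules above in a small check. This is a hypothetical Python helper. The string labels `"JPG"`, `"PNG"`, and `"H264"` are illustrative rather than Zoom's enum names, and the helper assumes a minimum of 1 FPS.

```python
MAX_FPS = 30  # documented maximum frame rate
STILL_IMAGE_MAX_FPS = 5  # JPG/PNG are used at or below this rate


def allowed_video_codecs(fps: int) -> set:
    """Return the codecs RTMS uses at the requested frame rate (assumes fps >= 1)."""
    if not 1 <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between 1 and {MAX_FPS}, got {fps}")
    if fps <= STILL_IMAGE_MAX_FPS:
        return {"JPG", "PNG"}
    return {"H264"}


def check_video_config(codec: str, fps: int) -> None:
    """Raise ValueError if the codec/frame-rate pairing breaks the documented rules."""
    allowed = allowed_video_codecs(fps)
    if codec not in allowed:
        raise ValueError(f"{codec} is not used at {fps} FPS; expected one of {sorted(allowed)}")


def frame_interval_ms(fps: int) -> float:
    """Average time between frames, e.g. about 33.3 ms at 30 FPS."""
    allowed_video_codecs(fps)  # reuse the range check
    return 1000 / fps
```

The interval helper gives a rough per-frame processing budget: a pipeline that takes longer than about 33 ms per frame cannot keep up with a 30 FPS stream.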

Transcripts arrive in real-time as UTF-8 text and include speaker attribution, language metadata, and timestamps. RTMS currently supports 37 spoken languages. Transcript messages are sent as soon as speech is detected and processed.

Developers can fine-tune stream frequency, fidelity, and codec preferences using the media_params section of the media handshake request.