TL;DR: I built a Zoom bot from scratch and I’ll be showing you exactly how I did it.

This bot will:

- Parse a Zoom URL

- Join a Zoom meeting

- Produce a live transcript from the meeting

If you’re more interested in the code, you can find it in this GitHub repository. If you’re more interested in Google Meet, check out our Google Meet bot from scratch guide instead.

I have spent more time thinking about meeting bots than any reasonable person should. That sounds deranged, I know, but there’s a good reason for this.

I’m a Developer Experience engineer at Recall.ai, and one of our main products is an API for meeting bots. It’s part of my job to know every facet of this product so that I can help developers succeed on our platform.

But despite all of this time and focus, it struck me recently that I actually know very little about the fundamental technology that makes our bots work. I know that building and scaling meeting bot infrastructure is incredibly hard, but I've never actually experienced this pain firsthand. For me, the only way to truly understand this was to get my hands dirty and implement a bot myself. This article explores my learnings and struggles as I built my own Zoom bot.

A sneak peek at the end result

https://www.loom.com/share/ab898f02a5344fdbb89fdd4701bbaf10

My criteria for a successful implementation

There’s a shocking amount of complexity involved in building a full-featured meeting bot, so I knew I needed to scope this project down for it to be doable.

I decided to build a Zoom bot that could transcribe calls in real time and display them in a user-facing application. The setup would be absolutely minimal, with the fewest dependencies possible. I also gave myself an ambitious deadline: one week to build the entire thing.

Architecting the Zoom bot

How I chose my approach

Approach 1: Zoom Meeting SDK

Zoom actually has a Meeting SDK specifically to help developers build meeting bots, so this seemed like a logical place to start. However, I quickly learned that Zoom’s Meeting SDK doesn’t allow you to consume the transcript directly from the meeting. To transcribe live meetings with a bot built on Zoom’s Meeting SDK, I would need to capture the raw audio from the meeting and pipe it to a transcription service. This introduced a ton of extra complexity, so I quickly ruled out using Zoom’s official meeting bot kit.

I also learned that bots built on the Meeting SDK need to go through a rigorous approval process before they can even join external Zoom meetings. It can take 4 to 6 weeks to get the application approved.

Lastly, bots built on the Meeting SDK can only record if they receive permission from the meeting host, and there are a myriad of account-level settings that can prevent the bot from ever requesting recording permission in the first place.

Approach 2: Browser Automation

Instead, I decided I would build a meeting bot using browser automation. In short, this entails spinning up a headless browser instance and programmatically controlling it to join a Zoom meeting through Zoom’s web interface.

Rather than extracting the raw audio from the meeting and piping it to an AI transcription model, I could lean on Zoom to do the heavy lifting. Every Zoom meeting has the option to enable closed captions, which leverage Zoom’s own transcription models and are completely free. With browser automation, I could have the bot scrape the captions directly from the Zoom meeting and pull transcripts in real time.

My meeting bot architecture

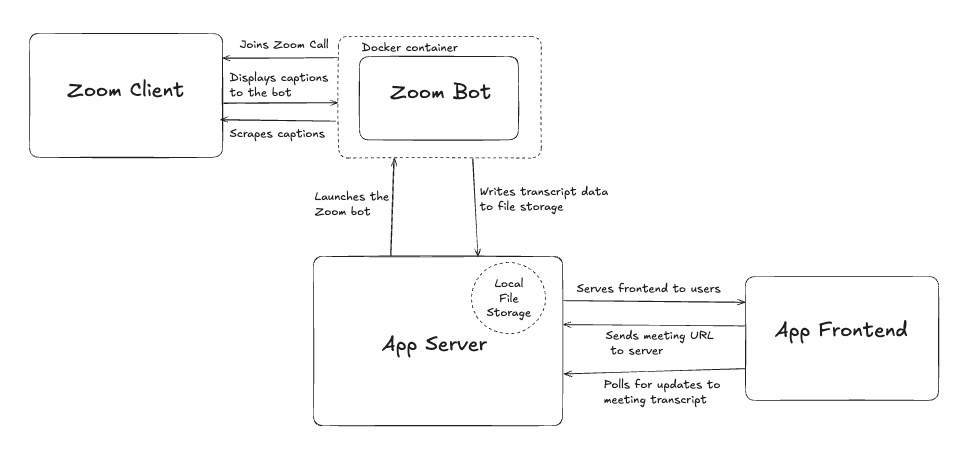

This is not going to be a production-ready application, and I've kept the architecture deliberately simple so it's as easy as possible to understand what's going on. The system has three main components:

Frontend: Vanilla HTML/CSS/JS for basic UI and displaying transcript data

Server: Express.js handling bot orchestration and serving transcript data

Bots: Playwright instances running in isolated Docker containers, each one joining a single meeting

You may notice that I never mentioned a database. That’s because I don’t have one! The meeting bots in my implementation write transcript data directly to files in a shared Docker volume on the server, and the server just reads from these files. This is super convenient for development since it cuts out an extra piece of infrastructure, but it’s absolutely not scalable. The good news is that it should be incredibly easy to replace this system with the database of our choice when the time comes.

Here’s a little more detail about how the implementation works end-to-end:

User flow: Users visit a webpage served by the Express server and paste in a Zoom meeting URL. The server runs some basic validation to make sure it's actually a Zoom URL, then spins up a fresh Docker container with a unique bot ID. Each meeting gets its own isolated container running a Playwright instance.

Data flow: The bot writes transcript data to a shared file system that's mounted between the host machine and the Docker container. Meanwhile, the frontend polls the Express server every few seconds for updates, and the server reads the latest transcript data from the file and sends it back.

Data processing: The bot applies some additional logic to attribute words to the correct speaker. I also apply post-processing and deduplication logic to the caption data to clean up what the bot scrapes from the webpage.
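To make the data flow a little more concrete, here is a minimal sketch of the file-based hand-off, assuming one JSON object per line in a per-bot transcript file. The function names and the one-line-per-fragment layout are illustrative assumptions, not necessarily what the repository does.

```javascript
// Hypothetical helpers for a transcript file with one JSON object per line.

// Bot side: turn one caption fragment into a line that can be appended to the file.
function serializeFragment(fragment) {
  return JSON.stringify(fragment) + "\n";
}

// Server side: parse the file contents, skipping blank or partially written lines.
function parseTranscript(fileContents) {
  const fragments = [];
  for (const line of fileContents.split("\n")) {
    if (!line.trim()) continue;
    try {
      fragments.push(JSON.parse(line));
    } catch {
      // A line that is still being written will not parse yet; the next poll picks it up.
    }
  }
  return fragments;
}

// Polling logic: return only the fragments the frontend has not seen yet.
function fragmentsSince(fileContents, alreadySeen) {
  return parseTranscript(fileContents).slice(alreadySeen);
}
```

In the bot this would pair with something like fs.appendFileSync(path, serializeFragment(fragment)), and in the Express handler with fs.readFileSync(path, "utf8"). Appending whole lines keeps the reader tolerant of a write that is still in progress.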

A note on Playwright

If you're not already familiar with Playwright, it's an automation library that lets you programmatically control web browsers.

For this project, Playwright is perfect because Zoom's web client is a complex JavaScript application with tons of dynamic elements. I need something that can handle popups, wait for elements to load, and interact with the page just like a real user would. Playwright gives us all of that, plus the ability to run in "headless" mode (no visible browser window) which is essential for running bots in Docker containers on our server.

During development, you can also switch to Playwright’s "headful" mode to watch the browser automation happen in a visible window. This is both fun to watch and incredibly helpful for debugging when your bot gets stuck somewhere it shouldn’t.

How I built the Zoom bot

https://www.loom.com/share/dac093d179244754b52ae468f0de80e2

I’ve told you about the tools and architecture that I chose. Now I’ll show you exactly how I used them to build my Zoom bot, starting from the moment it tries to enter a meeting.

Step 1: Pre-processing the Zoom URL

When someone shares a Zoom meeting link, it usually looks something like this: https://zoom.us/j/1234567890. If you click this link in a regular browser, Zoom will try to detect whether you have the desktop app installed and prompt you to either launch or download the app.

This creates a problem for our bot. I need it to join through the web client (since that's what Playwright can control), but Zoom keeps trying to push us toward the desktop app. The solution is to convert the meeting URL from the /j/ format to the /wc/join/ format, which forces Zoom to use the web client. This function takes a standard Zoom join URL and converts it to the web client version:

function toWebClient(url) {

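const m = url.match(/(https:\/\/[^/]+)\/j\/(\d+)(?:\?(.*))?$/);

if (!m) return url; // not a /j/ link (e.g. already /wc/join/), leave unchanged

const [, host, id, query] = m;

// keep the query string (e.g. the meeting password) only if there is one
return query ? `${host}/wc/join/${id}?${query}` : `${host}/wc/join/${id}`;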

}

There are actually a ton of other Zoom formats that I’m not specifically handling with this regex (e.g. webinar links, vanity links, personal meeting room links), but the existing regex does cover a large subset of Zoom meetings.
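For links that use a vanity or regional subdomain, a slightly more tolerant variant can lean on the built-in URL parser instead of a single regex. This is only a sketch under assumptions: the function name is hypothetical, it accepts only zoom.us hosts, and it still ignores webinar and personal meeting room links.

```javascript
// Hypothetical, more tolerant alternative to a single regex.
// Returns the web client URL, or null if the input is not a recognizable Zoom join link.
function toWebClientUrl(input) {
  let url;
  try {
    url = new URL(input);
  } catch {
    return null; // not a URL at all
  }

  // Accept zoom.us and its subdomains (e.g. us02web.zoom.us).
  const isZoomHost = url.hostname === "zoom.us" || url.hostname.endsWith(".zoom.us");
  if (url.protocol !== "https:" || !isZoomHost) return null;

  // Already a web client link: leave it alone.
  if (/^\/wc\/join\/\d+$/.test(url.pathname)) return url.toString();

  // Standard join link: /j/<meeting id>
  const match = url.pathname.match(/^\/j\/(\d+)$/);
  if (!match) return null;

  url.pathname = `/wc/join/${match[1]}`;
  return url.toString(); // the query string (e.g. a pwd parameter) is preserved
}
```

Returning null for anything unrecognized also doubles as the "is this actually a Zoom URL?" check that the server needs before it spins up a container.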

Step 2: Figuring out which selectors to use

When the bot joins the meeting, it needs to know how to interact with the page and click the correct buttons. Finding the right selectors for your bot to click is actually pretty straightforward with the right tools. This was my process whenever I needed to figure out which elements the bot needed to interact with:

- Launch the bot in headful mode - This shows the actual browser window so you can see exactly what your bot sees. Just set headless: false in your Playwright config.

- Add a debug breakpoint - Drop an await page.pause() right before the interaction you're trying to figure out. This freezes execution and opens Playwright's inspector.

- Use Playwright's inspector tools - While the page is paused, you can:

  * Hover over any element to see its selector options

  * Click the "Pick locator" button to point at elements and get their selectors

  * Try out selectors in the console with page.locator() to make sure they work

  * Use the "Record" button to capture your manual clicks and generate code

The inspector even shows you multiple selector strategies (by role, by text, by CSS) and lets you pick the most reliable one. Pro tip: prefer role-based selectors like getByRole("button", { name: "Mute" }) over CSS selectors - they're more resilient to UI changes.

This exploratory approach made it incredibly easy for me to dig through the Zoom web client and grab the exact selectors I needed.
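Here is a small sketch of how this workflow can be switched on and off without editing code. The DEBUG_BOT environment variable and both helper names are assumptions for illustration; headless and page.pause() are the Playwright features described above.

```javascript
// Hypothetical helpers: DEBUG_BOT=1 runs the browser headful and enables breakpoints.
function launchOptionsFor(env) {
  const debug = env.DEBUG_BOT === "1";
  return { headless: !debug };
}

// Pause only in debug mode, so the same code path can run unattended in Docker.
async function debugPause(page, env, label) {
  if (env.DEBUG_BOT !== "1") return false;
  console.log(`paused before: ${label}`);
  await page.pause(); // opens the Playwright Inspector (needs a headed browser)
  return true;
}
```

Typical usage would be chromium.launch(launchOptionsFor(process.env)) at startup and await debugPause(page, process.env, "mute button") right before the step being investigated.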

Step 3: Making the bot join the meeting

Once the bot joins the correct URL, it needs to behave like a polite meeting participant. It mutes its audio, turns off video, and enters its name. This is straightforward to handle. I just tell the bot to click the buttons that turn its audio and video off, and have it fill in its name.

await page.goto(toWebClient(origUrl), { waitUntil: "domcontentloaded" });

// mute audio, hide video

await page.waitForTimeout(4000); // wait for buttons to load

await page.getByRole("button", { name: /mute/i }).click();

await page.getByRole("button", { name: /stop video/i }).click();

await page.getByRole("textbox", { name: /your name/i }).fill("🤖FRIENDLY BOT DO NOT BE ALARMED");

await page.keyboard.press("Enter");

But here's where things get a bit more complicated. The bot might join the meeting instantly, or it might get held in a waiting room if the host has enabled one. I need to know the bot’s state at all times, so the code accounts for both cases:

// waiting room behavior

const waitingBanner = page.locator(

"text=/waiting for the host|host has joined|will let you in soon/i"

);

const inMeetingButton = page.getByRole("button", {

name: /mute my microphone/i,

});

transition(

await Promise.race([

waitingBanner

.waitFor({ timeout: 15_000 })

.then(() => BotState.IN_WAITING_ROOM),

inMeetingButton

.waitFor({ timeout: 15_000 })

.then(() => BotState.IN_CALL),

])

);

// start waiting room timeout if we're in the waiting room

if (state === BotState.IN_WAITING_ROOM) {

console.log(`⏳ host absent; will wait ${waitRoomLimitMs / 60000} min`);

await Promise.race([

inMeetingButton.waitFor({ timeout: waitRoomLimitMs }),

new Promise((_, rej) =>

setTimeout(

() => rej(new Error("waiting_room_timeout")),

waitRoomLimitMs

)

),

]);

transition(BotState.IN_CALL);

}

The bot waits to see which scenario it's in: either it gets admitted immediately (and sees the mute button), or it gets stuck in the waiting room (and sees the waiting room banner). If it's in the waiting room, the bot will patiently wait for a configurable amount of time before exiting the call.
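The snippet above calls transition() and reads state and BotState without showing their definitions. A minimal sketch of what they might look like, assuming a simple in-process state machine: IN_WAITING_ROOM and IN_CALL come from the snippet, while JOINING, ENDED, and the transition table are assumptions.

```javascript
// Hypothetical definitions for the BotState and transition() names used above.
const BotState = Object.freeze({
  JOINING: "JOINING",
  IN_WAITING_ROOM: "IN_WAITING_ROOM",
  IN_CALL: "IN_CALL",
  ENDED: "ENDED",
});

// Which states may follow which. Anything else is treated as a bug.
const allowed = {
  [BotState.JOINING]: [BotState.IN_WAITING_ROOM, BotState.IN_CALL, BotState.ENDED],
  [BotState.IN_WAITING_ROOM]: [BotState.IN_CALL, BotState.ENDED],
  [BotState.IN_CALL]: [BotState.ENDED],
  [BotState.ENDED]: [],
};

let state = BotState.JOINING;

function transition(next) {
  if (next === state) return state; // already there, nothing to do
  if (!allowed[state].includes(next)) {
    throw new Error(`illegal transition ${state} -> ${next}`);
  }
  console.log(`state: ${state} -> ${next}`);
  state = next;
  return state;
}
```

Making illegal transitions throw is a deliberate choice here: a bot that believes it is in the call while it is still in the waiting room is harder to debug than one that fails loudly.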

This kind of conditional logic is one reason why building meeting bots is more complex than it initially appears. You're not just joining a meeting - you're navigating all the different states and edge cases that real users encounter.

Step 4: Scraping speaker names

Now that my bot is in the meeting, I need it to start scraping the captions. However, before the bot can do this, I need to solve a frustrating problem with how Zoom displays speaker labels. When captions appear in the meeting, they show a little profile picture or avatar next to each line of text, but the HTML doesn't actually include the speaker's name anywhere. The only thing that’s shown is either the image or the initials of the speaker.

This means I can see that someone is speaking, but I have no idea who is speaking unless I can map those avatars back to actual participant names. To solve this, I need to build a mapping between profile pictures/initials and participant names by scraping the participants list.

async function startParticipantObserver(page) {

const participantsButton = page.getByRole("button", {

name: "open the participants list",

});

await participantsButton.dblclick();

await page.evaluate(() => {

if (window.__participantMapObserver) return;

window.__participantMap = {};

const buildMap = () => {

const map = {};

const items = document.querySelectorAll(

".participants-item__item-layout"

);

items.forEach((item) => {

const nameEl = item.querySelector(".participants-item__display-name");

if (!nameEl) return;

const displayName = nameEl.textContent.trim();

const avatar = item.querySelector(".participants-item__avatar");

if (!avatar) return;

let key = "";

if (avatar.tagName === "IMG") {

key = avatar.src;

} else {

key = avatar.textContent.trim();

}

if (key) map[key] = displayName;

});

window.__participantMap = map;

};

// Initial build and monitor for changes.

buildMap();

const listContainer = document.querySelector(

".ReactVirtualized__Grid__innerScrollContainer[role='rowgroup']"

);

if (!listContainer) {

console.warn(

"[participants] list container not found – open participant panel?"

);

return;

}

const obs = new MutationObserver(() => buildMap());

obs.observe(listContainer, { childList: true, subtree: true });

window.__participantMapObserver = obs;

});

}

This function opens the participants panel and creates a mapping between avatar identifiers (either image URLs for profile pictures or text content for initials like "AS") and the actual display names. It also sets up a MutationObserver to keep this mapping updated as people join the meeting. Later, when the bot is scraping captions and sees an avatar, it can look it up in this map to figure out who's actually speaking. It's a bit of a hack, but it's the only way to get proper speaker diarization since Zoom doesn't give us the names directly in the caption HTML.
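The lookup side of this mapping can be sketched as follows. The helper name and the "Unknown speaker" placeholder are assumptions; the map has the same shape as window.__participantMap above.

```javascript
// Hypothetical lookup helper. participantMap has the same shape as window.__participantMap:
// { "<avatar image URL or initials>": "<display name>" }
function resolveSpeaker(participantMap, avatarKey) {
  const key = (avatarKey || "").trim();
  if (key && Object.prototype.hasOwnProperty.call(participantMap, key)) {
    return participantMap[key];
  }
  // A participant who has not been mapped yet gets a placeholder instead of a guess.
  return "Unknown speaker";
}
```

One limitation worth knowing about: because the map is keyed by avatar, two participants without profile pictures who share the same initials end up under a single key, and whichever one was scanned last wins.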

Step 5: Getting a live transcript by scraping captions

Scraping captions from the Zoom meeting was by far the hardest part of this project. I spent a disproportionately long time trying to pull these into a reliable format. The issue I ran into is that Zoom’s captions are a bit unpredictable, which makes it very hard to keep an accurate picture of the transcript. Here’s a primer on how they work:

- Each speaker gets their own caption container that appears and disappears dynamically.

- The text within the container updates incrementally, adding words as they’re spoken.

- Older words slide off the beginning as new ones are added.

For example, watching a single sentence evolve looks like this:

"Hello everyone"

"Hello everyone, welcome to"

"Hello everyone, welcome to the meeting"

"welcome to the meeting, today we'll"

Notice how "Hello everyone" disappeared in the last update? This sliding window behavior means the bot can't just grab the latest text. Instead, I need to track what's new versus what we’ve already seen.

I tried a bunch of different approaches to deduplicating these captions in the browser, but all of them failed, producing tons of duplicate text and making the transcript unusable. After a ton of trial and error, I finally decided on a simpler approach. My browser-side code doesn’t even try to deduplicate the text. It just watches for any text changes and sends everything to the server:

const captionContainers = document.querySelectorAll('[class*=live-transcription]');

captionContainers.forEach(container => {

const observer = new MutationObserver(() => {

const captionSpans = container.querySelectorAll('.live-transcription-subtitle__item');

captionSpans.forEach(span => {

const currentText = span.innerText.trim();

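// speaker (participant-map lookup) and time (seconds since the bot joined) are computed elsewhere and omitted here

// Send ALL text to server, let it figure out what's new

console.log(`CAPTION: ${JSON.stringify({speaker, text: currentText, time})}`);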

});

});

observer.observe(container, {

childList: true,

subtree: true,

characterData: true

});

});
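The snippet above emits each caption through console.log with a CAPTION: prefix. One way to pick those up on the Node side is Playwright's console event. The sketch below assumes that wiring; parseCaptionMessage and attachCaptionListener are hypothetical names.

```javascript
const CAPTION_PREFIX = "CAPTION: ";

// Returns the caption payload, or null for unrelated or malformed console output.
function parseCaptionMessage(text) {
  if (!text.startsWith(CAPTION_PREFIX)) return null;
  try {
    const payload = JSON.parse(text.slice(CAPTION_PREFIX.length));
    return typeof payload.text === "string" ? payload : null;
  } catch {
    return null;
  }
}

// Wire the parser to a Playwright page. page.on("console") delivers a ConsoleMessage
// whose text() method returns the logged string.
function attachCaptionListener(page, onCaption) {
  page.on("console", (msg) => {
    const caption = parseCaptionMessage(msg.text());
    if (caption) onCaption(caption);
  });
}
```

Filtering on the prefix matters because the Zoom web client logs plenty of its own console output, and none of it should end up in the transcript.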

The server then handles the messy work of deduplication. It maintains a buffer of the last seen text for each speaker and extracts only the new portions:

const findNewText = (oldText, newText) => {

// Simple append: "Hello" → "Hello world"

if (newText.startsWith(oldText)) {

return newText.substring(oldText.length).trim();

}

// Sliding window: find the longest suffix of oldText that newText starts with

for (let i = oldText.length; i >= 1; i--) {

const suffix = oldText.substring(oldText.length - i);

if (newText.startsWith(suffix)) {

return newText.substring(suffix.length).trim();

}

}

// Completely new text

return newText;

};
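To show how the per-speaker buffer fits around a function like this, here is a self-contained sketch. The class name is hypothetical, and extractNewText is my own stand-in for the overlap logic that checks the longest possible overlap first; treat it as one possible approach rather than the repository's exact code.

```javascript
// Hypothetical overlap helper: returns the part of newText not already covered by oldText.
function extractNewText(oldText, newText) {
  if (newText.startsWith(oldText)) return newText.slice(oldText.length).trim();
  // Try the longest possible overlap first so a short accidental match cannot win.
  for (let i = Math.min(oldText.length, newText.length); i >= 1; i--) {
    if (newText.startsWith(oldText.slice(oldText.length - i))) {
      return newText.slice(i).trim();
    }
  }
  return newText; // no overlap: treat everything as new
}

// One buffer per speaker: remembers the last caption text seen for that speaker.
class CaptionDeduper {
  constructor() {
    this.lastSeen = new Map();
  }

  // Returns the new words for this update, or "" if nothing new arrived.
  push(speaker, text) {
    const previous = this.lastSeen.get(speaker) || "";
    this.lastSeen.set(speaker, text);
    return extractNewText(previous, text);
  }
}

const deduper = new CaptionDeduper();
const updates = [
  "Hello everyone",
  "Hello everyone, welcome to",
  "Hello everyone, welcome to the meeting",
  "welcome to the meeting, today we'll",
];
const fragments = updates.map((text) => deduper.push("Aydin", text));
console.log(fragments);
```

For the example sequence from earlier, this logs the fragments "Hello everyone", ", welcome to", "the meeting", and ", today we'll". Pushing the same text twice in a row yields an empty string, which the caller can simply drop.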

Speaker identification happens by looking up the avatar image or initials shown next to each caption against the participant map I built earlier. Each caption gets logged with the speaker name, the text, and a timestamp relative to when the bot joined the call. This allows the bot to produce captions in the following format:

{"speaker":"Aydin","text":"Oh wait I think it's working","time":1.46}

{"speaker":"Aydin","text":"I am so glad","time":6.8}

...

The bot saves these transcript fragments to the server’s file system as they’re generated, so that they’re available to be queried almost immediately.

The approach I’m using is likely not the best way of scraping captions. It still feels fragile, and I can’t help but feel that there’s probably a simpler and better way of doing this. I’ve also noticed that the bot still occasionally produces duplicate transcript lines despite my best efforts at removing these.

I’m chalking this up to my lack of familiarity with Playwright, but I definitely would love to see what a better and more maintainable implementation of a Zoom caption scraper looks like.

Bonus thoughts: Gotchas and debugging

Building this bot taught me several lessons that I wish I'd known from the start. I’ll try to save you some time by letting you know about these up front.

Headful vs. Headless mode

Code that works perfectly in headful mode (with a visible browser) can break mysteriously in headless mode. Some UI elements render differently when the browser runs without a visible window, causing selectors to fail. Headful mode is great for development and debugging, but always make sure that your code works in headless mode, since this is what you’ll be using in production.

Handling UI changes

Meeting platforms frequently update their interfaces and run A/B tests on their users. Avoid selectors that need to match a full sentence (e.g. “You will be let in from the waiting room”), since these are very likely to break if the platform changes its wording. Use partial word matches or find another property that’s more stable instead. The maintenance overhead of keeping up with UI changes is a major reason companies use Recall.ai for their Zoom meeting bots instead of building their own.

Debug video

Playwright's video recording feature (the recordVideo option when creating a browser context, or video: 'on' if you use the Playwright Test runner) is invaluable for debugging headless runs. When the bot fails in production, these recordings reveal issues that logs miss entirely, like UI elements overlapping or unexpected popups appearing. Even during development, I caught issues this way that would have been nearly impossible to diagnose otherwise.
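A sketch of enabling recording when using the Playwright library directly, where the context option is recordVideo. The helper names, the bot ID parameter, and the output directory are assumptions for illustration.

```javascript
// Hypothetical helper. browser is a Playwright Browser; botId and baseDir are assumptions.
async function newRecordedContext(browser, botId, baseDir = "/data/recordings") {
  return browser.newContext({
    // recordVideo is the library-level option; videos are saved under dir.
    recordVideo: { dir: `${baseDir}/${botId}`, size: { width: 1280, height: 720 } },
  });
}

// Videos are only finalized once the context closes, so close it even when the bot fails.
async function runWithRecording(browser, botId, runBot) {
  const context = await newRecordedContext(browser, botId);
  const page = await context.newPage();
  try {
    return await runBot(page);
  } finally {
    await context.close();
  }
}
```

The try/finally is the important part: a bot that crashes without closing its context can leave behind a video that was never fully written, which is exactly the run you wanted to watch.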

Lying to Zoom (fake mic / camera)

I found that I couldn’t get the bot to join in headless mode without giving it a “fake” microphone and camera through Playwright. The challenge is that if the mute/disable video selectors fail to actually turn off the bot’s audio and video (which happens more than you'd think), the bot joins the meeting emitting a loud test tone that will definitely get everyone's attention.
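One common way to provide fake devices is through Chromium command-line switches passed via Playwright's launch args. The sketch below assumes that approach; the helper name is hypothetical, and the two switches are Chromium's, not Playwright options.

```javascript
// Hypothetical launch options. The two flags are Chromium switches passed through args.
function botLaunchOptions({ headless = true } = {}) {
  return {
    headless,
    args: [
      "--use-fake-ui-for-media-stream", // auto-accept the mic/camera permission prompt
      "--use-fake-device-for-media-stream", // provide a synthetic mic and camera
    ],
  };
}
```

This would be used as chromium.launch(botLaunchOptions()). The synthetic microphone is where the test tone comes from, which is why a failed mute click is so noticeable.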

Building vs. Scaling: Two very different problems

To be honest, building my Zoom bot was actually a lot of fun. Messing around with Playwright selectors and watching my bot successfully click through all the hurdles and popups presented by Zoom felt like solving a puzzle. If I were only using this bot for my own personal meetings, I think that it could actually work pretty well. I also like that this approach is completely free, and that I have full ownership of the code. It’s open-source if you want to take a look.

To recap, I had a few requirements with this project, and I was able to accomplish them all:

- [x] Join calls and navigate different states (joining, waiting room, in call, call ended)

- [x] Scrape captions from the meeting in real time

- [x] Display captions in a user-facing application

It really wasn’t that hard. So why does everyone say that meeting bot infrastructure is so difficult?

Why my bot won’t scale

My bot looks pretty nice in a demo, but there’s a mountain of issues and edge cases that I would need to consider before even thinking about calling it production-ready.

The first and most obvious issue to me is the bot’s reliance on selector names. My bot depends on specific CSS classes and DOM structures that Zoom can change at any time. If they push an update that renames .participants-item__display-name to something else, my bot just breaks. To feel safe running my bot in production, I would want to find more robust indicators of the elements that the bot interacts with, and to establish fallbacks and alerting if these indicators weren’t found.
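As a rough idea of what fallbacks and alerting could look like, here is a sketch that tries several candidate locators in order and raises an alert when none of them work. The helper name, the candidate shape, and the alert hook are all assumptions.

```javascript
// Hypothetical helper: try several candidate locators in order and alert if none work.
// candidates: [{ label, locator }] where locator has an async click({ timeout }) method,
// as Playwright locators do.
async function clickFirstAvailable(candidates, { timeout = 3000, alert = console.error } = {}) {
  const failures = [];
  for (const { label, locator } of candidates) {
    try {
      await locator.click({ timeout });
      return label; // report which selector worked, useful for spotting UI drift
    } catch (err) {
      failures.push(`${label}: ${err.message}`);
    }
  }
  alert(`no selector matched:\n${failures.join("\n")}`);
  throw new Error("all_selectors_failed");
}
```

Logging which candidate succeeded is the early-warning signal: if the bot starts falling through to its second or third choice, the primary selector has probably been broken by a UI change.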

Zoom organizational restrictions create another whole category of failures. Some organizations disable captions entirely, which kills my bot's core functionality. Captions can also be turned off at the account level, or even within the meeting itself. Recall.ai actually has a full section of our docs devoted to all the possible settings that can prevent meeting captions from being enabled. Since I’m not capturing audio, I have no fallback transcription option available for these scenarios.

The resource requirements for browser automation also become prohibitive at scale. Each bot runs a full Chromium instance inside a Docker container, consuming at least roughly 500 MB of RAM. Chromium was not built to run hundreds of instances simultaneously on a single machine. You'll quickly encounter system resource limits, leading to container failures and dropped meetings. Running this at scale requires sophisticated orchestration systems, health monitoring, and automated recovery mechanisms. This is another reason companies that need meeting bots at scale use an API like Recall.ai.
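A quick back-of-the-envelope calculation shows how fast memory alone becomes the bottleneck. The host size, the reserved headroom, and the meeting count below are assumptions to adjust; only the 500 MB per-bot figure comes from my own observations above.

```javascript
// Back-of-the-envelope capacity estimate. All inputs are assumptions to adjust.
function maxBotsPerHost({ totalRamMb, perBotRamMb = 500, reservedRamMb = 2048 }) {
  const usable = totalRamMb - reservedRamMb;
  return Math.max(0, Math.floor(usable / perBotRamMb));
}

function hostsNeeded(concurrentMeetings, perHost) {
  return Math.ceil(concurrentMeetings / perHost);
}

const perHost = maxBotsPerHost({ totalRamMb: 16 * 1024 });
console.log(perHost, hostsNeeded(1000, perHost));
```

With these numbers, a 16 GB host with 2 GB held back for the OS fits at most 28 bots, so 1,000 concurrent meetings would need at least 36 such hosts. Since 500 MB is a floor rather than a typical figure, and CPU is not counted at all, the real number per host would be lower.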

When bots inevitably crash during meetings, I would need comprehensive infrastructure to recover from this:

- Monitoring systems to detect and handle failed containers proactively

- Intelligent cleanup processes that distinguish between healthy and zombie instances

- Restart mechanisms with safeguards against creating duplicate bots in the same meeting
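To illustrate the last two points, here is a minimal in-memory sketch of a registry that allows one live bot per meeting and uses heartbeats to spot zombies. The class, its method names, and the 30-second staleness threshold are all assumptions; a real deployment would keep this state somewhere more durable than process memory.

```javascript
// Hypothetical in-memory registry: one bot per meeting, with heartbeat-based zombie detection.
class BotRegistry {
  constructor({ staleAfterMs = 30_000, now = () => Date.now() } = {}) {
    this.staleAfterMs = staleAfterMs;
    this.now = now;
    this.bots = new Map(); // meetingId -> { botId, lastHeartbeat }
  }

  // Returns false instead of registering a second live bot for the same meeting.
  tryRegister(meetingId, botId) {
    const existing = this.bots.get(meetingId);
    if (existing && !this.isStale(existing)) return false;
    this.bots.set(meetingId, { botId, lastHeartbeat: this.now() });
    return true;
  }

  // Called periodically by a healthy bot to prove it is still alive.
  heartbeat(meetingId, botId) {
    const entry = this.bots.get(meetingId);
    if (entry && entry.botId === botId) entry.lastHeartbeat = this.now();
  }

  isStale(entry) {
    return this.now() - entry.lastHeartbeat > this.staleAfterMs;
  }

  // Meetings whose bot stopped reporting: candidates for cleanup and restart.
  staleMeetings() {
    return [...this.bots].filter(([, e]) => this.isStale(e)).map(([id]) => id);
  }
}
```

The restart path would call tryRegister before launching a replacement container, so a bot that is merely slow is never joined by a duplicate, while one that has gone silent past the threshold can be replaced.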

As you can tell, most of these challenges only rear their heads once you start to scale. This represents a fundamental shift in complexity and required expertise. What began as a fairly straightforward browser automation task evolves into a difficult distributed systems challenge.

Final Thoughts

How to improve my meeting bot

Given the above, there is obviously a lot that I can improve about this bot. I thought it would be fun to list some extensions to the bot’s existing functionality that could make it more useful.

I have all this conversation data in real time, but what can I do with it?

Potential feature additions:

- Summaries for late joiners - Generate real-time meeting summaries for participants joining mid-conversation

- Interactive Q&A - Enable chat-based queries like "What did we discuss about the budget?" and have an LLM analyze the relevant transcript segments

- Action item extraction - Automatically identify and track commitments made during the meeting

- Sentiment analysis - Monitor the meeting's tone and flag when discussions become heated or unproductive

However, if you are determined to take this proof-of-concept toward production, here are some essential improvements you'll need:

Infrastructure basics:

- Replace file storage with a proper database (PostgreSQL, MongoDB, or similar)

- Implement message queuing for reliable bot orchestration

- Add container orchestration with Kubernetes or ECS

Improve transcription quality:

- Introduce more robust deduplication to prevent all duplicate transcript fragments

- Capture raw audio streams instead of relying on Zoom's captions

- Integrate with professional transcription services (AssemblyAI, Deepgram, etc.)

Enhanced debugging capabilities:

- Store debug recordings and screenshots in the database for post-meeting error analysis

- Implement structured logging with correlation IDs for tracking issues

- Add comprehensive error reporting and alerting

- Create automated tests that continuously verify selector stability

The build vs. buy reality check

Having built my own bot from scratch, I finally understand what the core Recall.ai engineering team has been dealing with for years. Every weird popup, platform update, and edge case I encountered in this project? We've already hit it, debugged it, and built robust workarounds so our users never have to. This is why we spend so much time thinking about meeting bots. Not because we enjoy the pain, but because we'd rather deal with all this complexity once so that thousands of developers don't have to. It's the classic "build vs buy" decision, except now I've actually experienced both sides.

If you weren't completely put off by what you just read and want to experience this pain firsthand, all the starter code for my bot is freely available on GitHub.

Otherwise, if you want to build this exact same functionality without all the suffering, I can wholeheartedly recommend the Recall.ai API. You can sign up for a free account here and have your first bot recording and transcribing a meeting within minutes by following our quickstart guide.

Appendix

What about other options for getting transcripts from a Zoom meeting?

Meeting bots are not the only way to retrieve transcript data from a Zoom meeting, though there are many benefits to the bot form factor. For a comprehensive overview, check out this article on the different APIs to get transcripts of Zoom meetings.